20

Matthew DeCarlo

Chapter Outline

- Where do I start with quantitative data analysis? (12 minute read)

- Measures of central tendency (17 minute read, including 5-minute video)

- Frequencies and variability (13 minute read)

Content warning: examples in this chapter contain references to depression and self-esteem.

People often dread quantitative data analysis because—oh no—it’s math. And true, you’re going to have to work with numbers. For years, I thought I was terrible at math, and then I started working with data and statistics, and it turned out I had a real knack for it. (I have a statistician friend who claims statistics is not math, which is a math joke that’s way over my head, but there you go.) This chapter, and the subsequent quantitative analysis chapters, are going to focus on helping you understand descriptive statistics and a few statistical tests, NOT calculate them (with a couple of exceptions). Future research classes will focus on teaching you to calculate these tests for yourself. So take a deep breath and clear your mind of any doubts about your ability to understand and work with numerical data.

In this chapter, we’re going to discuss the first step in analyzing your quantitative data: univariate data analysis. Univariate data analysis is a quantitative method in which a variable is examined individually to determine its distribution, or the way the scores are distributed across the levels of that variable. When we talk about levels, what we are talking about are the possible values of the variable, like a participant’s age, income or gender. (Note that this is different than our earlier discussion in Chapter 10 of levels of measurement, but the level of measurement of your variables absolutely affects what kinds of analyses you can do with them.) Univariate analysis is non-relational, which just means that we’re not looking into how our variables relate to each other. Instead, we’re looking at variables in isolation to try to understand them better. For this reason, univariate analysis is best for descriptive research questions.

So when do you use univariate data analysis? Always! It should be the first thing you do with your quantitative data, whether you are planning to move on to more sophisticated statistical analyses or are conducting a study to describe a new phenomenon. You need to understand what the values of each variable look like—what if one of your variables has a lot of missing data because participants didn’t answer that question on your survey? What if there isn’t much variation in the gender of your sample? These are things you’ll learn through univariate analysis.

14.1 Where do I start with quantitative data analysis?

Learning Objectives

Learners will be able to…

- Define and construct a data analysis plan

- Define key data management terms—variable name, data dictionary, primary and secondary data, observations/cases

No matter how large or small your data set is, quantitative data can be intimidating. There are a few ways to make things manageable for yourself, including creating a data analysis plan and organizing your data in a useful way. We’ll discuss some of the keys to these tactics below.

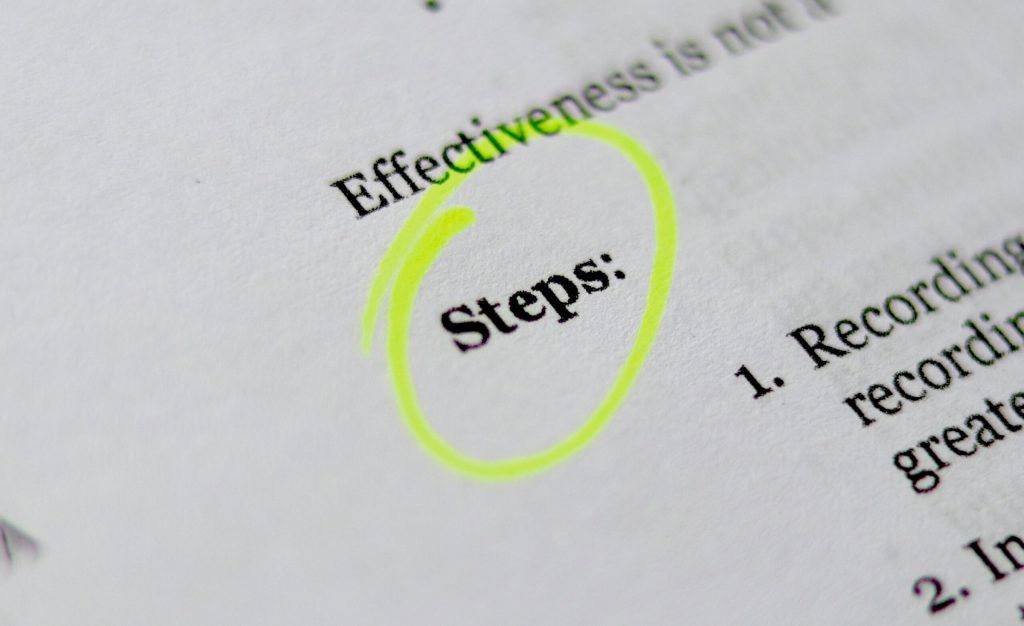

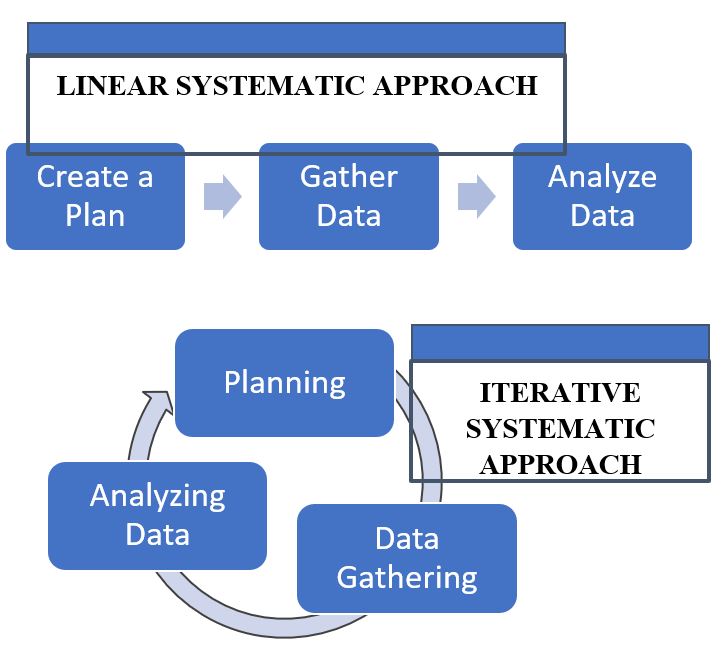

The data analysis plan

As part of planning for your research, and to help keep you on track and make things more manageable, you should come up with a data analysis plan. You’ve basically been working on this while writing your research proposal so far. A data analysis plan is an ordered outline that includes your research question, a description of the data you are going to use to answer it, and the exact step-by-step analyses that you plan to run to answer your research question. This last part, which includes choosing your quantitative analyses, is the focus of this and the next two chapters of this book.

A basic data analysis plan might look something like what you see in Table 14.1. Don’t panic if you don’t yet understand some of the statistical terms in the plan; we’re going to delve into them throughout the next few chapters. Note here also that this is what operationalizing your variables and moving through your research with them looks like on a basic level.

| Research question: What is the relationship between a person’s race and their likelihood to graduate from high school? |

| Data: Individual-level U.S. American Community Survey data for 2017 from IPUMS, which includes race/ethnicity and other demographic data (i.e., educational attainment, family income, employment status, citizenship, presence of both parents, etc.). Only including individuals for which race and educational attainment data is available. |

Steps in Data Analysis Plan

|

An important point to remember is that you should never get stuck on using a particular statistical method because you or one of your co-researchers thinks it’s cool or it’s the hot thing in your field right now. You should certainly go into your data analysis plan with ideas, but in the end, you need to let your research question and the actual content of your data guide what statistical tests you use. Be prepared to be flexible if your plan doesn’t pan out because the data is behaving in unexpected ways.

Managing your data

Whether you’ve collected your own data or are using someone else’s data, you need to make sure it is well-organized in a database in a way that’s actually usable. “Database” can be kind of a scary word, but really, I just mean an Excel spreadsheet or a data file in whatever program you’re using to analyze your data (like SPSS, SAS, or R). (I would avoid Excel if you’ve got a very large data set, one with millions of records or hundreds of variables, because it gets very slow and can only handle a certain number of cases and variables, depending on your version. But if your data set is smaller and you plan to keep your analyses simple, you can definitely get away with Excel.) Your database or data set should be organized with variables as your columns and observations/cases as your rows. For example, let’s say we did a survey on ice cream preferences and collected the following information in Table 14.2:

| Name | Age | Gender | Hometown | Fav_Ice_Cream |

| Tom | 54 | 0 | 1 | Rocky Road |

| Jorge | 18 | 2 | 0 | French Vanilla |

| Melissa | 22 | 1 | 0 | Espresso |

| Amy | 27 | 1 | 0 | Black Cherry |
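If it helps to see the "variables as columns, observations as rows" idea outside of a spreadsheet, here is a minimal sketch of the same mini data set in Python. The names `VARIABLES`, `rows` and `column` are made up for this illustration; a program like SPSS or R would store the same thing in its own data file format.

```python
# Each variable name is a column; each dictionary is one observation/case (a row).
VARIABLES = ["Name", "Age", "Gender", "Hometown", "Fav_Ice_Cream"]

rows = [
    {"Name": "Tom", "Age": 54, "Gender": 0, "Hometown": 1, "Fav_Ice_Cream": "Rocky Road"},
    {"Name": "Jorge", "Age": 18, "Gender": 2, "Hometown": 0, "Fav_Ice_Cream": "French Vanilla"},
    {"Name": "Melissa", "Age": 22, "Gender": 1, "Hometown": 0, "Fav_Ice_Cream": "Espresso"},
    {"Name": "Amy", "Age": 27, "Gender": 1, "Hometown": 0, "Fav_Ice_Cream": "Black Cherry"},
]


def column(data, variable):
    """Return every observation's value for a single variable."""
    return [row[variable] for row in data]


sample_size = len(rows)     # the number of observations/cases
ages = column(rows, "Age")  # one variable, pulled out to examine on its own

print(sample_size)  # 4
print(ages)         # [54, 18, 22, 27]
```

Pulling out one column at a time like this is exactly what univariate analysis does: it looks at a single variable in isolation.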

There are a few key data management terms to understand:

- Variable name: Just what it sounds like—the name of your variable. Make sure this is something useful, short and, if you’re using something other than Excel, all one word. Most statistical programs will automatically rename variables for you if they aren’t one word, but the names are usually a little ridiculous and long.

- Observations/cases: The rows in your data set. In social work, these are often your study participants (people), but can be anything from census tracts to black bears to trains. When we talk about sample size, we’re talking about the number of observations/cases. In our mini data set, each person is an observation/case.

- Primary data: Data you have collected yourself.

- Secondary data: Data someone else has collected that you have permission to use in your research. For example, for my student research project in my MSW program, I used data from a local probation program to determine if a shoplifting prevention group was reducing the rate at which people were re-offending. I had data on who participated in the program and then received their criminal history six months after the end of their probation period. This was secondary data I used to determine whether the shoplifting prevention group had any effect on an individual’s likelihood of re-offending.

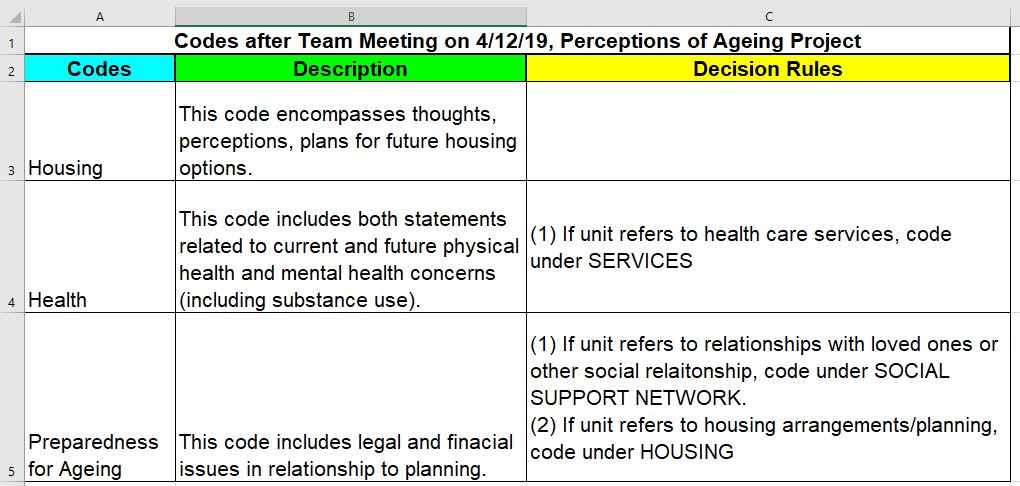

- Data dictionary (sometimes called a code book): This is the document where you list your variable names, what the variables actually measure or represent, what each of the values of the variable mean if the meaning isn’t obvious (i.e., if there are numbers assigned to gender), the level of measurement and anything special to know about the variables (for instance, the source if you mashed two data sets together). If you’re using secondary data, the data dictionary should be available to you.

When considering what data you might want to collect as part of your project, there are two important considerations that can create dilemmas for researchers. You might only get one chance to interact with your participants, so you must think comprehensively in your planning phase about what information you need and collect as much relevant data as possible. At the same time, though, especially when collecting sensitive information, you need to consider how onerous the data collection is for participants and whether you really need them to share that information. Just because something is interesting to us doesn’t mean it’s related enough to our research question to chase it down. Work with your research team and/or faculty early in your project to talk through these issues before you get to this point. And if you’re using secondary data, make sure you have access to all the information you need in that data before you use it.

Let’s take that mini data set we’ve got up above and I’ll show you what your data dictionary might look like in Table 14.3.

| Variable name | Description | Values/Levels | Level of measurement | Notes |

| Name | Participant’s first name | n/a | n/a | First names only. If names appear more than once, a random number has been attached to the end of the name to distinguish. |

| Age | Participant’s age at time of survey | n/a | Interval/Ratio | Self-reported |

| Gender | Participant’s self-identified gender | 0=cisgender female; 1=cisgender male; 2=non-binary; 3=transgender female; 4=transgender male; 5=another gender | Nominal | Self-reported |

| Hometown | Participant’s hometown (this town or another town) | 0=This town; 1=Another town | Nominal | Self-reported |

| Fav_Ice_Cream | Participant’s favorite ice cream | n/a | n/a | Self-reported |
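A data dictionary doesn't have to be a separate document; it can also sit next to your data in a form a program can read. The sketch below stores part of the data dictionary above as a Python dictionary and uses it to turn coded values back into labels. The layout and the `decode` helper are assumptions for illustration, not a standard format.

```python
# Part of the data dictionary, keyed by variable name.
data_dictionary = {
    "Age": {
        "description": "Participant's age at time of survey",
        "level": "Interval/Ratio",
        "values": None,  # n/a: the number is the value itself
    },
    "Gender": {
        "description": "Participant's self-identified gender",
        "level": "Nominal",
        "values": {
            0: "cisgender female",
            1: "cisgender male",
            2: "non-binary",
            3: "transgender female",
            4: "transgender male",
            5: "another gender",
        },
    },
    "Hometown": {
        "description": "Participant's hometown (this town or another town)",
        "level": "Nominal",
        "values": {0: "This town", 1: "Another town"},
    },
}


def decode(variable, value):
    """Return the label for a coded value; uncoded variables come back unchanged."""
    labels = data_dictionary[variable]["values"]
    if labels is None:
        return value
    return labels[value]


print(decode("Hometown", 1))  # Another town
print(decode("Gender", 2))    # non-binary
print(decode("Age", 27))      # 27
```

Keeping the codes and their meanings in one place means anyone who picks up the data later does not have to guess what a 0 or a 1 stands for.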

Key Takeaways

- Getting organized at the beginning of your project with a data analysis plan will help keep you on track. Data analysis plans should include your research question, a description of your data, and a step-by-step outline of what you’re going to do with it.

- Be flexible with your data analysis plan—sometimes data surprises us and we have to adjust the statistical tests we are using.

- Always make a data dictionary or, if using secondary data, get a copy of the data dictionary so you (or someone else) can understand the basics of your data.

Exercises

- Make a data analysis plan for your project. Remember this should include your research question, a description of the data you will use, and a step-by-step outline of what you’re going to do with your data once you have it, including statistical tests (non-relational and relational) that you plan to use. You can do this exercise whether you’re using quantitative or qualitative data! The same principles apply.

- Make a data dictionary for the data you are proposing to collect as part of your study. You can use the example above as a template.

14.2 Measures of central tendency

Learning Objectives

Learners will be able to…

- Explain measures of central tendency—mean, median and mode—and when to use them to describe your data

- Explain the importance of examining the range of your data

- Apply the appropriate measure of central tendency to a research problem or question

A measure of central tendency is one number that can give you an idea about the distribution of your data. The video below gives a more detailed introduction to central tendency. Then we’ll talk more specifically about our three measures of central tendency—mean, median and mode.

One quick note: the narrator in the video mentions skewness and kurtosis. Basically, these refer to a particular shape for a distribution when you graph it out. That gets into some more advanced multivariate analysis that we aren’t tackling in this book, so just file them away for a more advanced class, if you ever take on additional statistics coursework.

There are three key measures of central tendency, which we’ll go into now.

Mean

The mean, also called the average, is calculated by adding up the values of all your cases and dividing the sum by the number of cases. You’ve undoubtedly calculated a mean at some point in your life. The mean is the most widely used measure of central tendency because it’s easy to understand and calculate. It can only be used with interval/ratio variables, like age, test scores or years of post-high school education. (If you think about it, using it with a nominal or ordinal variable doesn’t make much sense. Why would we care about the average of the numerical values we assigned to certain races?)

The biggest drawback of using the mean is that it’s extremely sensitive to outliers, or extreme values in your data. And the smaller your data set is, the more sensitive your mean is to these outliers. One thing to remember about outliers—they are not inherently bad, and can sometimes contain really important information. Don’t automatically discard them because they skew your data.

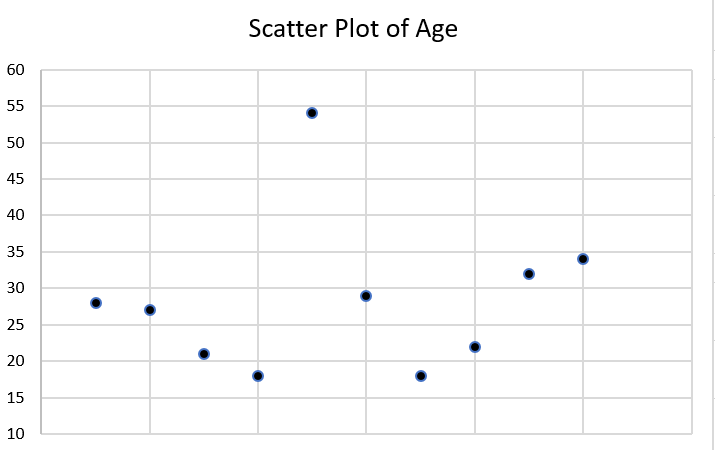

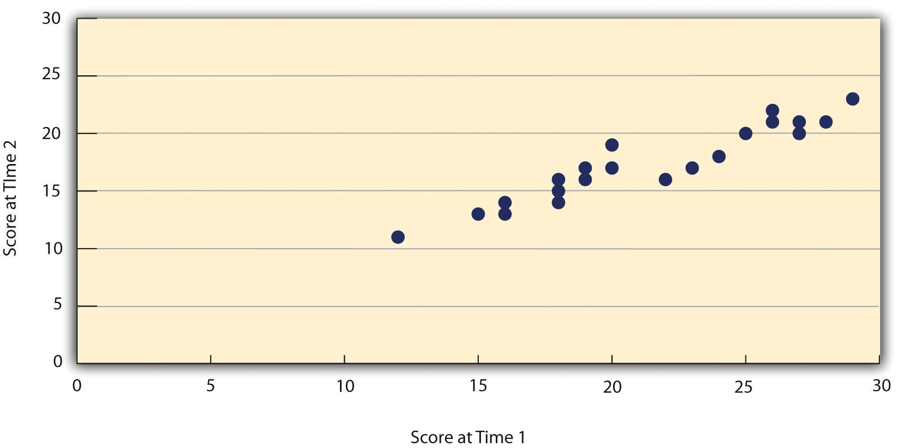

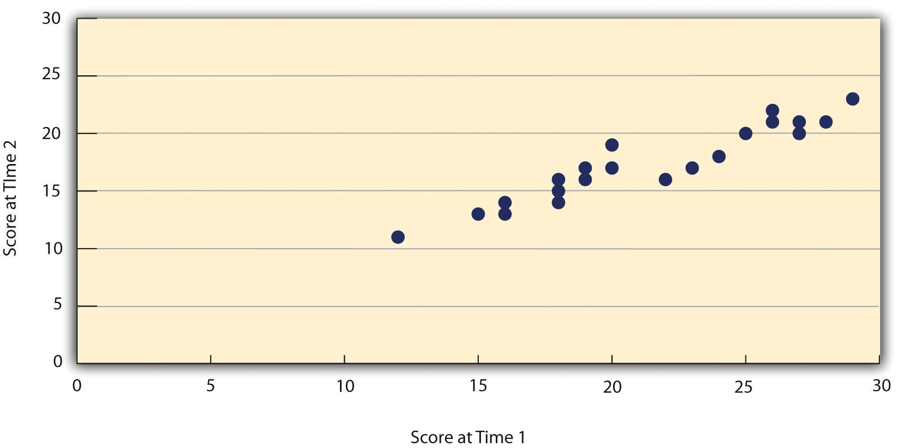

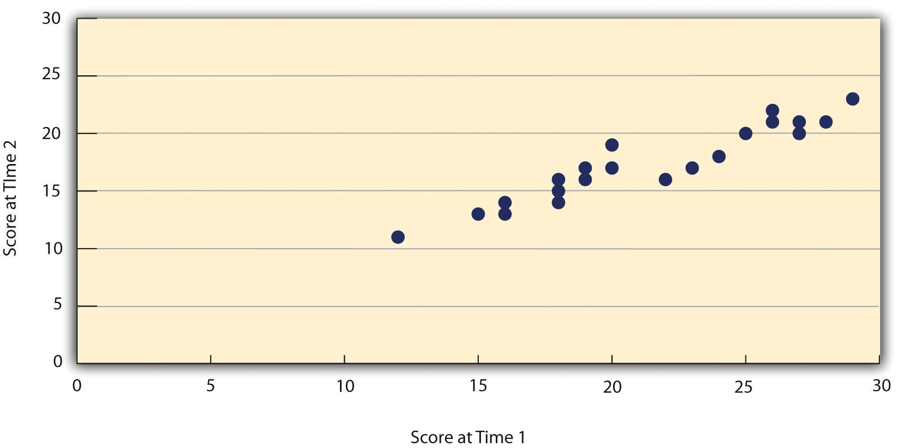

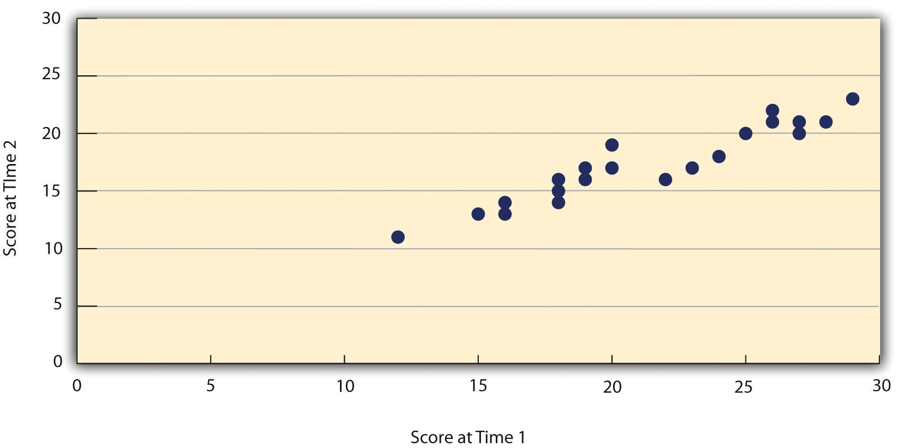

Let’s take a minute to talk about how to locate outliers in your data. If your data set is very small, you can just take a look at it and see outliers. But in general, you’re probably going to be working with data sets that have at least a couple dozen cases, which makes just looking at your values to find outliers difficult. The best way to quickly look for outliers is probably to make a scatter plot with Excel or whatever database management program you’re using.

Let’s take a very small data set as an example. Oh hey, we had one before! I’ve re-created it in Table 14.5. We’re going to add some more cases to it so it’s a little easier to illustrate what we’re doing.

| Name | Age | Gender | Hometown | Fav_Ice_Cream |

| Tom | 54 | 0 | 1 | Rocky Road |

| Jorge | 18 | 2 | 0 | French Vanilla |

| Melissa | 22 | 1 | 0 | Espresso |

| Amy | 27 | 1 | 0 | Black Cherry |

| Akiko | 28 | 3 | 0 | Chocolate |

| Michael | 32 | 0 | 1 | Pistachio |

| Jess | 29 | 1 | 0 | Chocolate |

| Subasri | 34 | 1 | 0 | Vanilla Bean |

| Brian | 21 | 0 | 1 | Moose Tracks |

| Crystal | 18 | 1 | 0 | Strawberry |

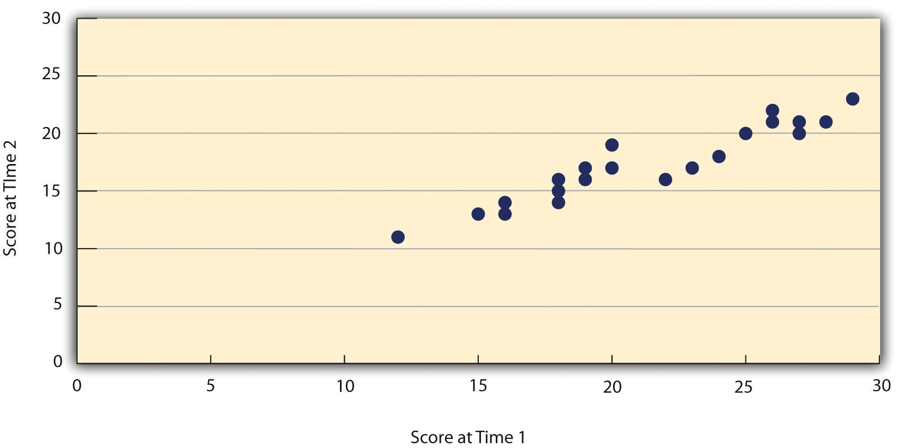

Let’s say we’re interested in knowing more about the distribution of participant age. Let’s see a scatterplot of age (Figure 14.1). On our y-axis (the vertical one) is the value of age, and on our x-axis (the horizontal one) is the frequency of each age, or the number of times it appears in our data set.

Do you see any outliers in the scatter plot? There is one participant who is significantly older than the rest at age 54. Let’s think about what happens when we calculate our mean with and without that outlier. Complete the two exercises below by using the ages listed in our mini-data set in this section.

Next, let’s try it without the outlier.

With our outlier, the average age of our participants is about 28 (28.3), and without it, the average age is about 25 (25.4). That might not seem enormous, but it illustrates the effects of outliers on the mean.
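If you want to check the arithmetic, here is a minimal sketch using Python's built-in `statistics` module. The list `ages` is just the Age column of our mini data set typed in by hand, and the variable names are made up for this example.

```python
from statistics import mean

# Age column of the ten-person mini data set, in table order (Tom first).
ages = [54, 18, 22, 27, 28, 32, 29, 34, 21, 18]

# Drop the one outlier (Tom, age 54) to see how much the mean moves.
ages_without_outlier = [age for age in ages if age != 54]

mean_with = mean(ages)                     # 283 / 10
mean_without = mean(ages_without_outlier)  # 229 / 9

print(round(mean_with, 1))     # 28.3
print(round(mean_without, 1))  # 25.4
```

A single case out of ten moves the mean by almost three years, which is the sensitivity to outliers described above.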

Just because Tom is an outlier at age 54 doesn’t mean you should exclude him. The most important thing about outliers is to think critically about them and how they could affect your analysis. Finding outliers should prompt a few questions. First, could the data have been entered incorrectly? Is Tom actually 24, and someone just hit the “5” instead of the “2” on the number pad? Second, what might be special about Tom that he ended up in our group, given how different he is? Are there other relevant ways in which Tom differs from our group (is he an outlier in other ways)? Finally, does it really matter that Tom is much older than our other participants? If we don’t think age is a relevant factor in ice cream preferences, then it probably doesn’t. If we do, then we probably should have made an effort to get a wider range of ages in our participants.

Median

The median (also called the 50th percentile) is the middle value when all our values are placed in numerical order. If you have five values and you put them in numerical order, the third value will be the median. When you have an even number of values, you’ll have to take the average of the middle two values to get the median. So, if you have 6 values, the average of values 3 and 4 will be the median. Keep in mind that for large data sets, you’re going to want to use either Excel or a statistical program to calculate the median—otherwise, it’s nearly impossible logistically.

Like the mean, you can only calculate the median with interval/ratio variables, like age, test scores or years of post-high school education. The median is also a lot less sensitive to outliers than the mean. While it can be more time intensive to calculate, the median is preferable in most cases to the mean for this reason. It gives us a more accurate picture of where the middle of our distribution sits in most cases. In my work as a policy analyst and researcher, I rarely, if ever, use the mean as a measure of central tendency. Its main value for me is to compare it to the median for statistical purposes. So get used to the median, unless you’re specifically asked for the mean. (When we talk about t-tests in the next chapter, we’ll talk about when the mean can be useful.)

Let’s go back to our little data set and calculate the median age of our participants (Table 14.6).

| Name | Age | Gender | Hometown | Fav_Ice_Cream |

| Tom | 54 | 0 | 1 | Rocky Road |

| Jorge | 18 | 2 | 0 | French Vanilla |

| Melissa | 22 | 1 | 0 | Espresso |

| Amy | 27 | 1 | 0 | Black Cherry |

| Akiko | 28 | 3 | 0 | Chocolate |

| Michael | 32 | 0 | 1 | Pistachio |

| Jess | 29 | 1 | 0 | Chocolate |

| Subasri | 34 | 1 | 0 | Vanilla Bean |

| Brian | 21 | 0 | 1 | Moose Tracks |

| Crystal | 18 | 1 | 0 | Strawberry |

Remember, to calculate the median, you put all the values in numerical order and take the number in the middle. When there’s an even number of values, take the average of the two middle values.

What happens if we remove Tom, the outlier?

With Tom in our group, the median age is 27.5, and without him, it’s 27. You can see that the median was far less sensitive to him being included in our data than the mean was.
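The same check works for the median. This is a small sketch, again using the `statistics` module and the Age column typed in by hand; `sorted_ages` is only there so you can see the middle values for yourself.

```python
from statistics import median

ages = [54, 18, 22, 27, 28, 32, 29, 34, 21, 18]

sorted_ages = sorted(ages)
print(sorted_ages)  # [18, 18, 21, 22, 27, 28, 29, 32, 34, 54]

# Ten values: the median is the average of the 5th and 6th (27 and 28).
median_with = median(ages)

# Nine values once Tom (54) is removed: the median is the 5th value.
median_without = median([age for age in ages if age != 54])

print(median_with)     # 27.5
print(median_without)  # 27
```

Removing Tom shifts the median by half a year, compared with almost three years for the mean.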

Mode

The mode of a variable is the most commonly occurring value. While you can calculate the mode for interval/ratio variables, it’s mostly useful when examining and describing nominal or ordinal variables. Think of it this way—do we really care that there are two people with an income of $38,000 per year, or do we care that these people fall into a certain category related to that value, like above or below the federal poverty level?

Let’s go back to our ice cream survey (Table 14.7).

| Name | Age | Gender | Hometown | Fav_Ice_Cream |

| Tom | 54 | 0 | 1 | Rocky Road |

| Jorge | 18 | 2 | 0 | French Vanilla |

| Melissa | 22 | 1 | 0 | Espresso |

| Amy | 27 | 1 | 0 | Black Cherry |

| Akiko | 28 | 3 | 0 | Chocolate |

| Michael | 32 | 0 | 1 | Pistachio |

| Jess | 29 | 1 | 0 | Chocolate |

| Subasri | 34 | 1 | 0 | Vanilla Bean |

| Brian | 21 | 0 | 1 | Moose Tracks |

| Crystal | 18 | 1 | 0 | Strawberry |

We can use the mode for a few different variables here: gender, hometown and fav_ice_cream. The cool thing about the mode is that you can use it for numeric/quantitative and text/qualitative variables.

So let’s find some modes. For hometown (whether or not the participant’s hometown is the one in which the survey was administered), the mode is 0, or “this town,” because that’s the most common answer, appearing seven times. For gender, the mode is 1, which appears five times; according to the data dictionary in Table 14.3, that code stands for “cisgender male.” And for fav_ice_cream, the mode is Chocolate, although there’s a lot of variation there. Sometimes, you may have more than one mode, which is still useful information.
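Counting by hand gets tedious quickly, so here is a minimal sketch that tallies each column of Table 14.7 with Python's `collections.Counter` and `statistics.multimode`. The three lists are the Gender, Hometown and Fav_Ice_Cream columns typed in table order; `multimode` returns a list because a variable can have more than one mode.

```python
from collections import Counter
from statistics import multimode

# Columns of the ten-person table, in row order (Tom through Crystal).
gender = [0, 2, 1, 1, 3, 0, 1, 1, 0, 1]
hometown = [1, 0, 0, 0, 0, 1, 0, 0, 1, 0]
fav_ice_cream = [
    "Rocky Road", "French Vanilla", "Espresso", "Black Cherry", "Chocolate",
    "Pistachio", "Chocolate", "Vanilla Bean", "Moose Tracks", "Strawberry",
]

# Counter tallies how many times each value occurs.
gender_counts = Counter(gender)
hometown_counts = Counter(hometown)

print(gender_counts[0], gender_counts[1])      # 3 5
print(hometown_counts[0], hometown_counts[1])  # 7 3

# multimode lists every value tied for most frequent.
print(multimode(gender))         # [1]
print(multimode(hometown))       # [0]
print(multimode(fav_ice_cream))  # ['Chocolate']
```

The mode works just as well on the text column as on the numeric codes, since it only counts how often each value appears.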

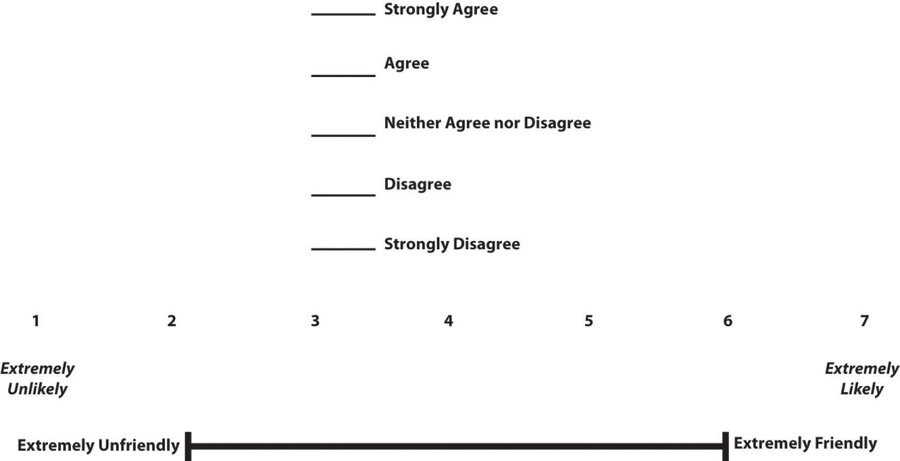

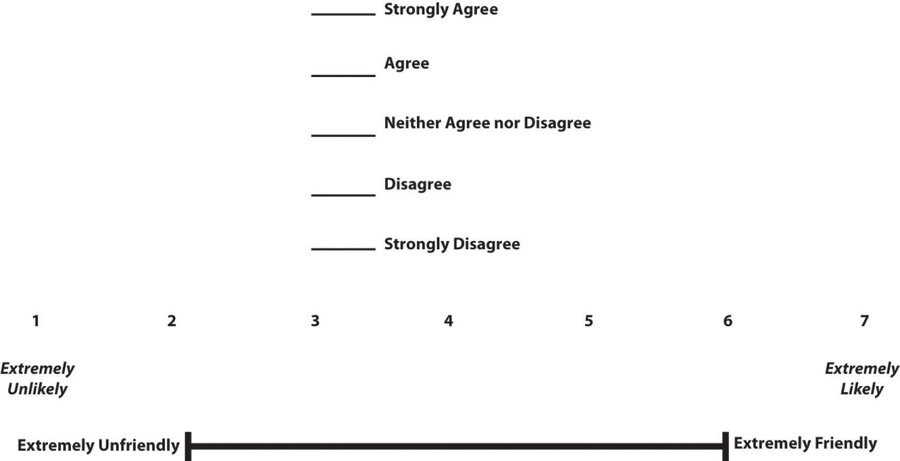

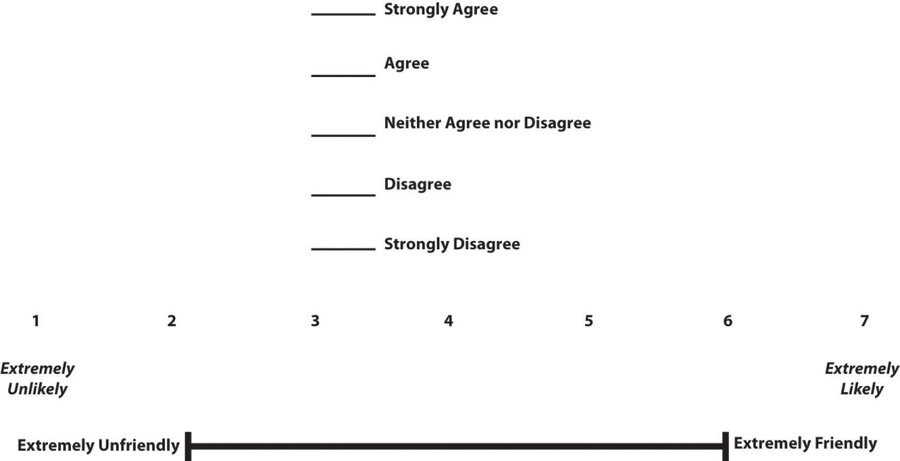

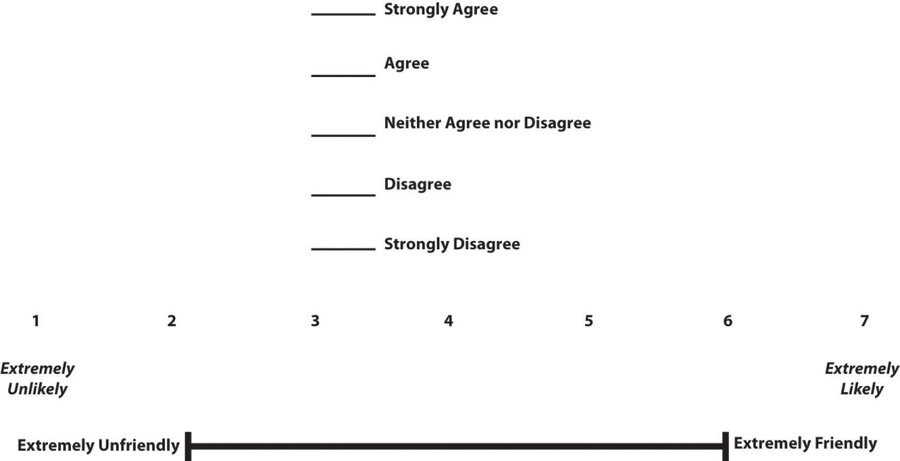

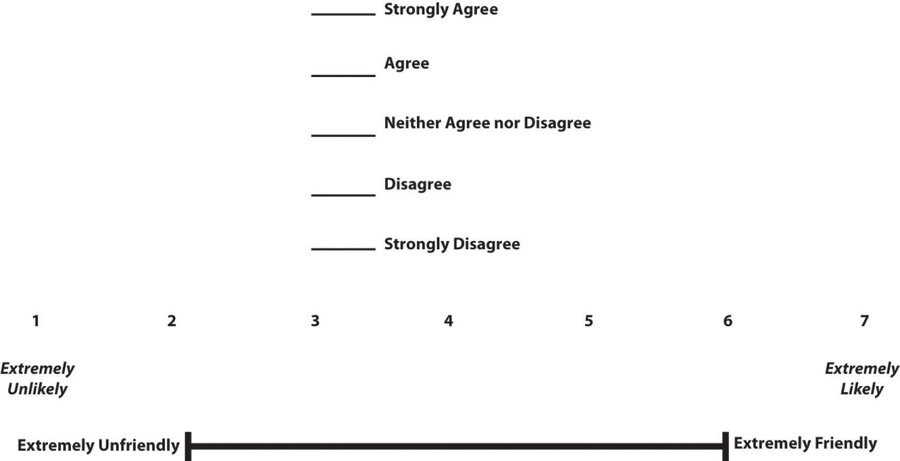

One final thing I want to note about these three measures of central tendency: if you’re using something like a ranking question or a Likert scale, depending on what you’re measuring, you might use a mean or median, even though these look like they will only spit out ordinal variables. For example, say you’re a car designer and want to understand what people are looking for in new cars. You conduct a survey asking participants to rank the characteristics of a new car in order of importance (an ordinal question). The most commonly occurring answer—the mode—really tells you the information you need to design a car that people will want to buy. On the flip side, if you have a scale of 1 through 5 measuring a person’s satisfaction with their most recent oil change, you may want to know the mean score because it will tell you, relative to most or least satisfied, where most people fall in your survey. To know what’s most helpful, think critically about the question you want to answer and about what the actual values of your variable can tell you.

Key Takeaways

- The mean is the average value for a variable, calculated by adding all values and dividing the total by the number of cases. While the mean contains useful information about a variable’s distribution, it’s also susceptible to outliers, especially with small data sets.

- In general, the mean is most useful with interval/ratio variables.

- The median, or 50th percentile, is the exact middle of our distribution when the values of our variable are placed in numerical order. The median is usually a more accurate measurement of the middle of our distribution because outliers have a much smaller effect on it.

- In general, the median is only useful with interval/ratio variables.

- The mode is the most commonly occurring value of our variable. In general, it is only useful with nominal or ordinal variables.

Exercises

- Say you want to know the income of the typical participant in your study. Which measure of central tendency would you use? Why?

- Find an interval/ratio variable and calculate the mean and median. Make a scatter plot and look for outliers.

- Find a nominal variable and calculate the mode.

14.3 Frequencies and variability

Learning Objectives

Learners will be able to…

- Define descriptive statistics and understand when to use these methods.

- Produce and describe visualizations to report quantitative data.

Descriptive statistics refer to a set of techniques for summarizing and displaying data. We’ve already been through the measures of central tendency (which are considered descriptive statistics), which got their own section because they’re such a big topic. Now, we’re going to talk about other descriptive statistics and ways to visually represent data.

Frequency tables

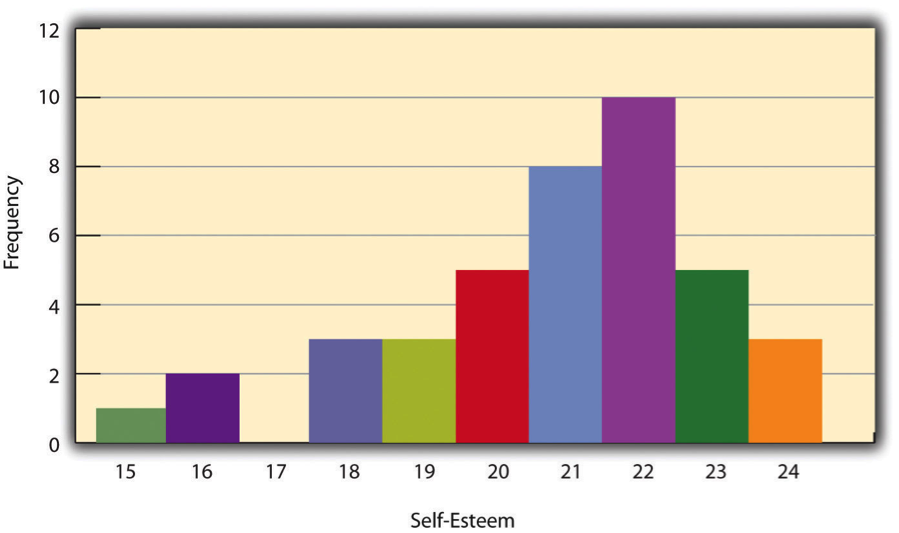

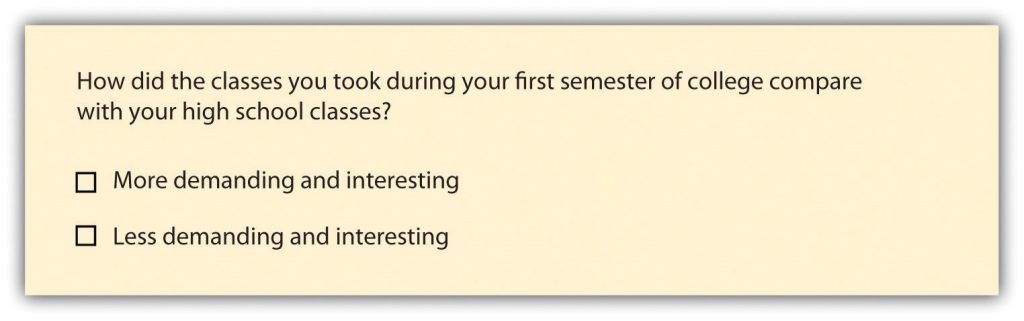

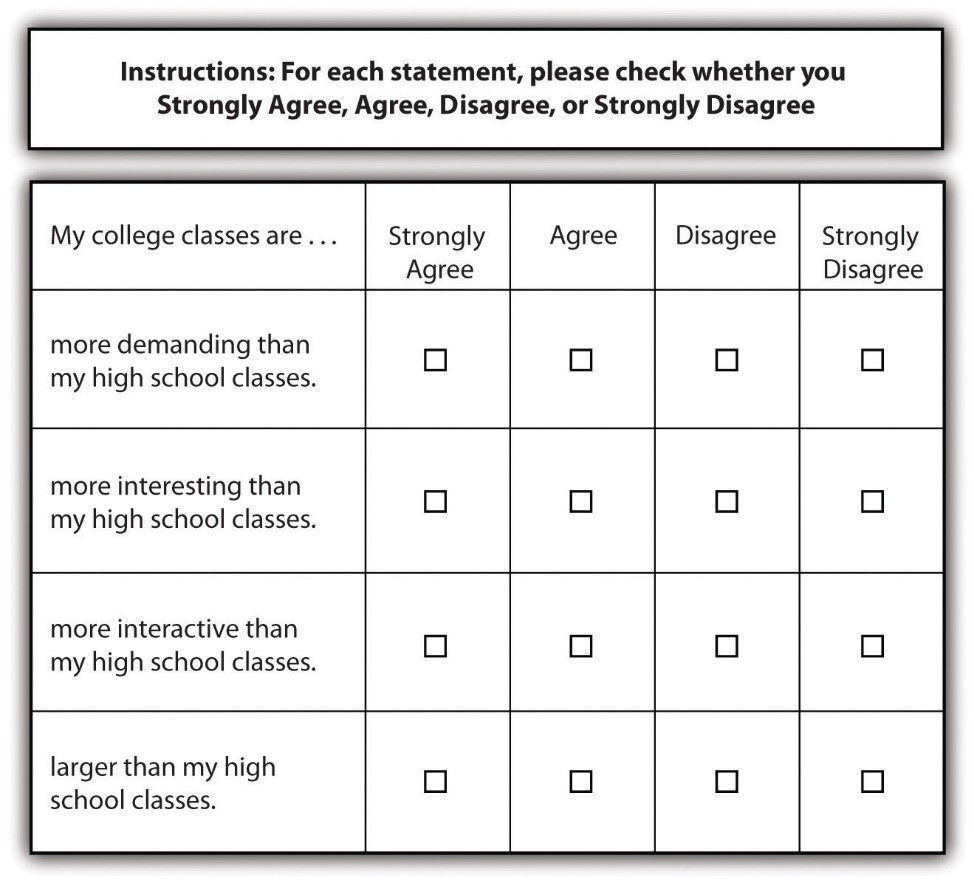

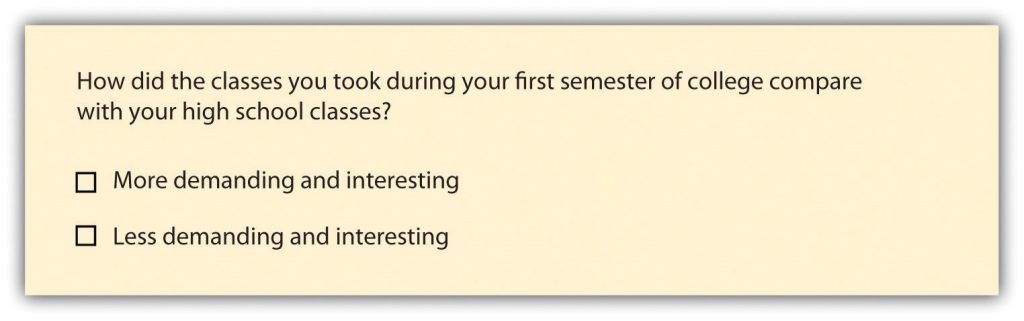

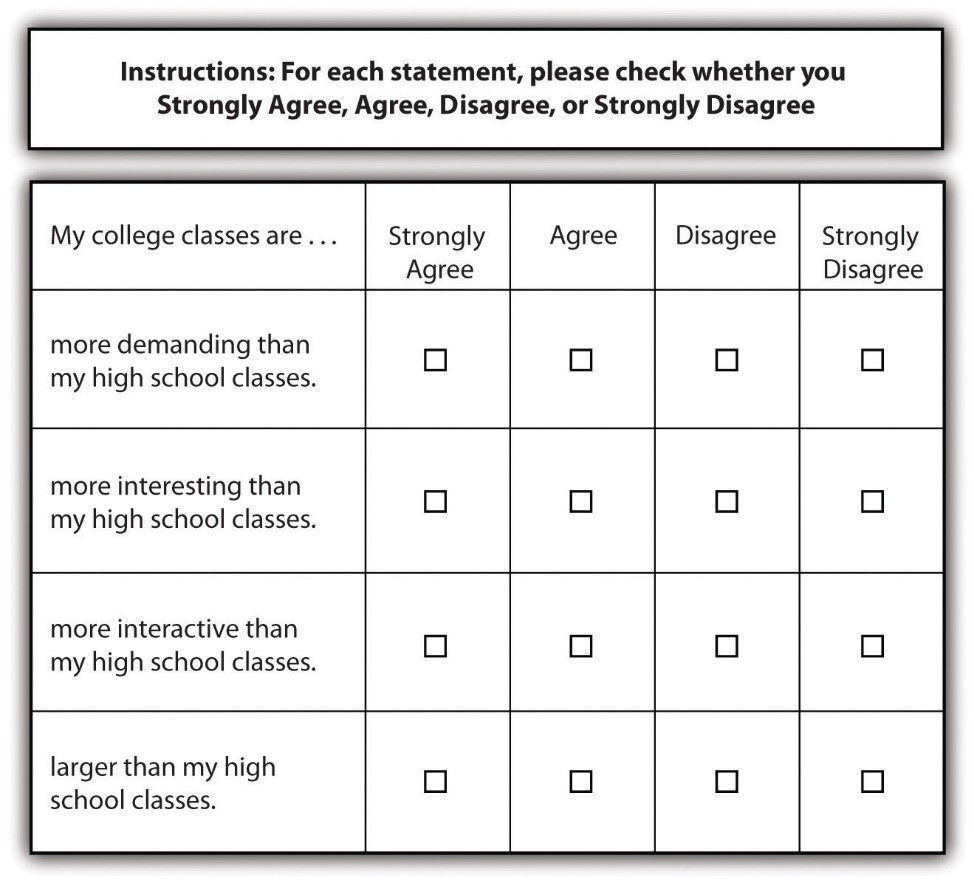

One way to display the distribution of a variable is in a frequency table. Table 14.8, for example, is a frequency table showing a hypothetical distribution of scores on the Rosenberg Self-Esteem Scale for a sample of 40 college students. The first column lists the values of the variable (the possible scores on the Rosenberg scale) and the second column lists the frequency of each score. This table shows that there were three students who had self-esteem scores of 24, five who had self-esteem scores of 23, and so on. From a frequency table like this, one can quickly see several important aspects of a distribution, including the range of scores (from 15 to 24), the most and least common scores (22 and 17, respectively), and any extreme scores that stand out from the rest.

| Self-esteem score (out of 30) | Frequency |

| 24 | 3 |

| 23 | 5 |

| 22 | 10 |

| 21 | 8 |

| 20 | 5 |

| 19 | 3 |

| 18 | 3 |

| 17 | 0 |

| 16 | 2 |

| 15 | 1 |
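To see how a table like this comes out of raw scores, here is a minimal sketch. We don't have the 40 individual scores, so the code first rebuilds a list of scores from the frequencies in the table and then counts them again; the names `table_frequencies`, `scores` and `frequency_table` are invented for this example.

```python
from collections import Counter

# Frequencies copied from the self-esteem table (score -> number of students).
table_frequencies = {24: 3, 23: 5, 22: 10, 21: 8, 20: 5,
                     19: 3, 18: 3, 17: 0, 16: 2, 15: 1}

# Rebuild one list entry per student, e.g. three 24s, five 23s, and so on.
scores = [score for score, count in table_frequencies.items() for _ in range(count)]

counts = Counter(scores)

# List every level from the highest observed score down to the lowest,
# including levels nobody scored (a Counter returns 0 for those).
frequency_table = [
    (level, counts[level])
    for level in range(max(scores), min(scores) - 1, -1)
]

for level, frequency in frequency_table:
    print(f"{level:>5} | {frequency}")

print(len(scores))            # 40
print(counts.most_common(1))  # [(22, 10)]
```

Stepping through every level between the highest and lowest score is what keeps the row for 17 in the table even though its frequency is zero.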

There are a few other points worth noting about frequency tables. First, the levels listed in the first column usually go from the highest at the top to the lowest at the bottom, and they usually do not extend beyond the highest and lowest scores in the data. For example, although scores on the Rosenberg scale can vary from a high of 30 to a low of 0, Table 14.8 only includes levels from 24 to 15 because that range includes all the scores in this particular data set. Second, when there are many different scores across a wide range of values, it is often better to create a grouped frequency table, in which the first column lists ranges of values and the second column lists the frequency of scores in each range. Table 14.9, for example, is a grouped frequency table showing a hypothetical distribution of simple reaction times for a sample of 20 participants. In a grouped frequency table, the ranges must all be of equal width, and there are usually between five and 15 of them. Finally, frequency tables can also be used for nominal or ordinal variables, in which case the levels are category labels. The order of the category labels is somewhat arbitrary, but they are often listed from the most frequent at the top to the least frequent at the bottom.

| Reaction time (ms) | Frequency |

| 241–260 | 1 |

| 221–240 | 2 |

| 201–220 | 2 |

| 181–200 | 9 |

| 161–180 | 4 |

| 141–160 | 2 |
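A grouped frequency table takes one extra step: sorting each score into its range before counting. The sketch below assumes a made-up list of 20 reaction times, invented so that the counts match the table above (the table itself does not give the raw times), and the names `BIN_WIDTH`, `LOWEST` and `bin_for` are hypothetical.

```python
from collections import Counter

# Invented reaction times (ms), chosen only to reproduce the table's frequencies.
reaction_times = [
    152, 158,
    165, 170, 174, 179,
    182, 185, 187, 188, 190, 192, 195, 198, 200,
    204, 215,
    225, 238,
    252,
]

BIN_WIDTH = 20  # every range must be the same width
LOWEST = 141    # start of the lowest range in the table


def bin_for(value):
    """Return the (start, end) range that a reaction time falls into."""
    start = LOWEST + ((value - LOWEST) // BIN_WIDTH) * BIN_WIDTH
    return (start, start + BIN_WIDTH - 1)


grouped = Counter(bin_for(time) for time in reaction_times)

# Print from the highest range to the lowest, like the table.
for start, end in sorted(grouped, reverse=True):
    print(f"{start}-{end} | {grouped[(start, end)]}")
```

Because every range is computed from the same `BIN_WIDTH`, the equal-width requirement is met automatically.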

Histograms

A histogram is a graphical display of a distribution. It presents the same information as a frequency table but in a way that is grasped more quickly and easily. The histogram in Figure 14.2 presents the distribution of self-esteem scores in Table 14.8. The x-axis (the horizontal one) of the histogram represents the variable and the y-axis (the vertical one) represents frequency. Above each level of the variable on the x-axis is a vertical bar that represents the number of individuals with that score. When the variable is quantitative, as it is in this example, there is usually no gap between the bars. When the variable is nominal or ordinal, however, there is usually a small gap between them. (The gap at 17 in this histogram reflects the fact that there were no scores of 17 in this data set.)
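You don't need graphing software to get a rough feel for a histogram. This is a minimal text-only sketch that draws one `#` per student for each self-esteem score. Note that it is turned on its side compared with the description above: the scores run down the page and the bars run left to right. The function name `text_histogram` is made up for this example.

```python
# Frequencies of each self-esteem score, from the frequency table.
frequencies = {15: 1, 16: 2, 17: 0, 18: 3, 19: 3,
               20: 5, 21: 8, 22: 10, 23: 5, 24: 3}


def text_histogram(freqs):
    """Return one line per level, with one '#' for every case at that level."""
    return [f"{level:>2} | {'#' * count}" for level, count in sorted(freqs.items())]


lines = text_histogram(frequencies)
print("\n".join(lines))
```

The empty bar at 17 and the longest bar at 22 stand out immediately, which is the point of plotting a distribution rather than reading it from a table.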

Distribution shapes

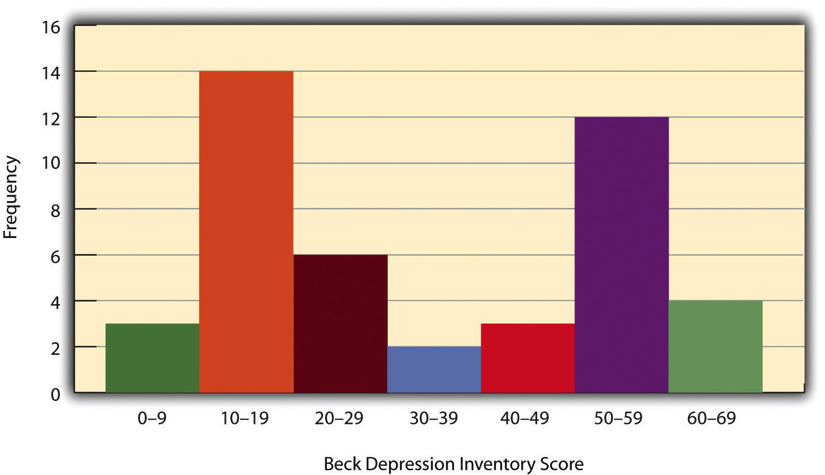

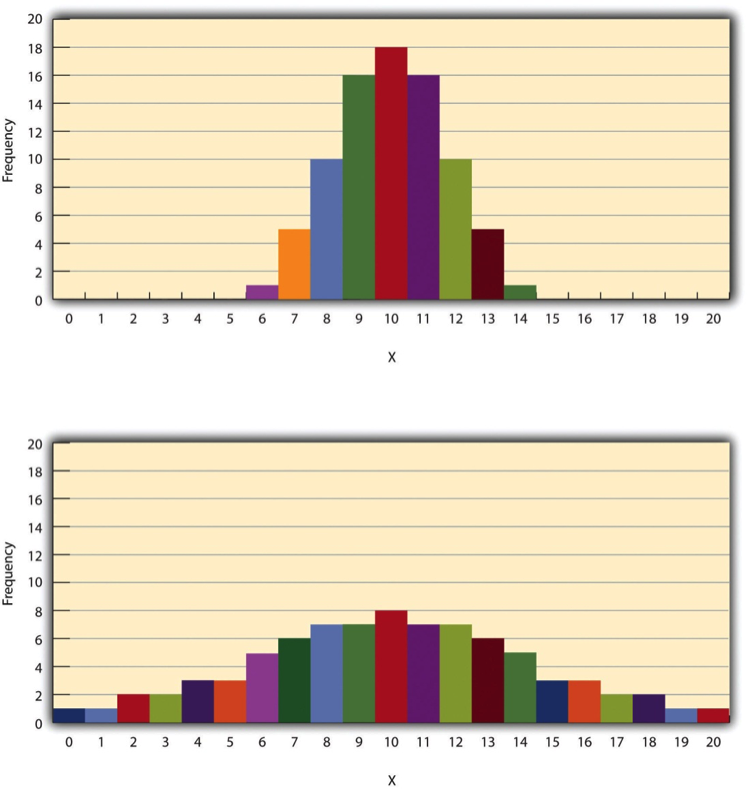

When the distribution of a quantitative variable is displayed in a histogram, it has a shape. The shape of the distribution of self-esteem scores in Figure 14.2 is typical. There is a peak somewhere near the middle of the distribution and “tails” that taper in either direction from the peak. The distribution of Figure 14.2 is unimodal, meaning it has one distinct peak, but distributions can also be bimodal, as in Figure 14.3, meaning they have two distinct peaks. Figure 14.3, for example, shows a hypothetical bimodal distribution of scores on the Beck Depression Inventory. I know we talked about the mode mostly for nominal or ordinal variables, but you can actually use histograms to look at the distribution of interval/ratio variables, too, and still have a unimodal or bimodal distribution even if you aren’t calculating a mode. Distributions can also have more than two distinct peaks, but these are relatively rare in social work research.

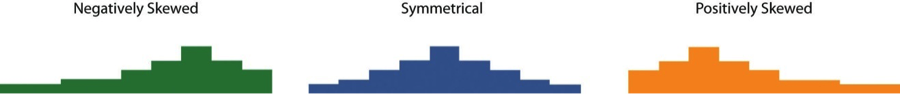

Another characteristic of the shape of a distribution is whether it is symmetrical or skewed. The distribution in the center of Figure 14.4 is symmetrical. Its left and right halves are mirror images of each other. The distribution on the left is negatively skewed, with its peak shifted toward the upper end of its range and a relatively long negative tail. The distribution on the right is positively skewed, with its peak toward the lower end of its range and a relatively long positive tail.

Range: A simple measure of variability

The variability of a distribution is the extent to which the scores vary around their central tendency. Consider the two distributions in Figure 14.5, both of which have the same central tendency. The mean, median, and mode of each distribution are 10. Notice, however, that the two distributions differ in terms of their variability. The top one has relatively low variability, with all the scores relatively close to the center. The bottom one has relatively high variability, with the scores spread across a much greater range.

One simple measure of variability is the range, which is simply the difference between the highest and lowest scores in the distribution. The range of the self-esteem scores in Table 14.8, for example, is the difference between the highest score (24) and the lowest score (15). That is, the range is 24 − 15 = 9. Although the range is easy to compute and understand, it can be misleading when there are outliers. Imagine, for example, an exam on which all the students scored between 90 and 100. It has a range of 10. But if there was a single student who scored 20, the range would increase to 80, giving the impression that the scores were quite variable when in fact only one student differed substantially from the rest.
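Here is a small sketch of the range calculation. The self-esteem values are the scores that actually occur in the frequency table; the exam scores are invented to match the example in the text (everyone between 90 and 100, then one student at 20). The function name `value_range` is an assumption made to avoid clashing with Python's built-in `range`.

```python
def value_range(scores):
    """Range = highest score minus lowest score."""
    return max(scores) - min(scores)


# Scores that occur at least once in the self-esteem frequency table.
self_esteem_scores = [24, 23, 22, 21, 20, 19, 18, 16, 15]

# Hypothetical exam scores, all between 90 and 100.
exam_scores = [90, 92, 95, 97, 100]
exam_scores_with_outlier = exam_scores + [20]

print(value_range(self_esteem_scores))        # 9
print(value_range(exam_scores))               # 10
print(value_range(exam_scores_with_outlier))  # 80
```

One extra score is enough to turn a range of 10 into a range of 80, which is why the range should be read alongside a frequency table or histogram rather than on its own.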

Key Takeaways

- Descriptive statistics are a way to summarize and display data, and are essential to understand and report your data.

- A frequency table is useful for nominal and ordinal variables and is needed to produce a histogram.

- A histogram is a graphic representation of your data that shows how many cases fall into each level of your variable.

- Variability is important to understand in analyzing your data because studying a phenomenon that does not vary for your population does not provide a lot of information.

Exercises

- Think about the dependent variable in your project. What would you do if you analyzed its variability for people of different genders, and there was very little variability?

- What do you think it would mean if the distribution of the variable were bimodal?

Chapter Outline

- Ethical and social justice considerations in measurement

- Post-positivism: Assumptions of quantitative methods

- Researcher positionality

- Assessing measurement quality and fighting oppression

Content warning: TBD.

12.1 Ethical and social justice considerations in measurement

Learning Objectives

Learners will be able to...

- Identify potential cultural, ethical, and social justice issues in measurement.

With your variables operationalized, it's time to take a step back and look at how measurement in social science impacts our daily lives. As we will see, how we measure things is shaped by power arrangements inside our society. More insidiously, by establishing what is scientifically true, measures have their own power to influence the world. Just like reification in the conceptual world, how we operationally define concepts can reinforce or fight against oppressive forces.

Data equity

How we decide to measure our variables determines what kind of data we end up with in our research project. Because scientific processes are a part of our sociocultural context, the same biases and oppressions we see in the real world can be manifested or even magnified in research data. Jagadish and colleagues (2021)[1] present four dimensions of data equity that are relevant to consider: in representation of non-dominant groups within data sets; in how data is collected, analyzed, and combined across datasets; in equitable and participatory access to data; and finally in the outcomes associated with the data collection. Historically, we have mostly focused on measures producing outcomes that are biased in one way or another, and this section reviews many such examples. However, it is important to note that equity must also come from designing measures that respond to questions like:

- Are groups historically suppressed from the data record represented in the sample?

- Are equity data gathered by researchers and used to uncover and quantify inequity?

- Are the data accessible across domains and levels of expertise, and can community members participate in the design, collection, and analysis of the public data record?

- Are the data collected used to monitor and mitigate inequitable impacts?

So, it's not just about whether measures work for one population or another. Data equity is about the context in which data are created from how we measure people and things. We agree with these authors that data equity should be considered within the context of automated decision-making systems, recognizing a broader literature around the role of administrative systems in creating and reinforcing discrimination. To combat the inequitable processes and outcomes we describe below, researchers must foreground equity as a core component of measurement.

Flawed measures & missing measures

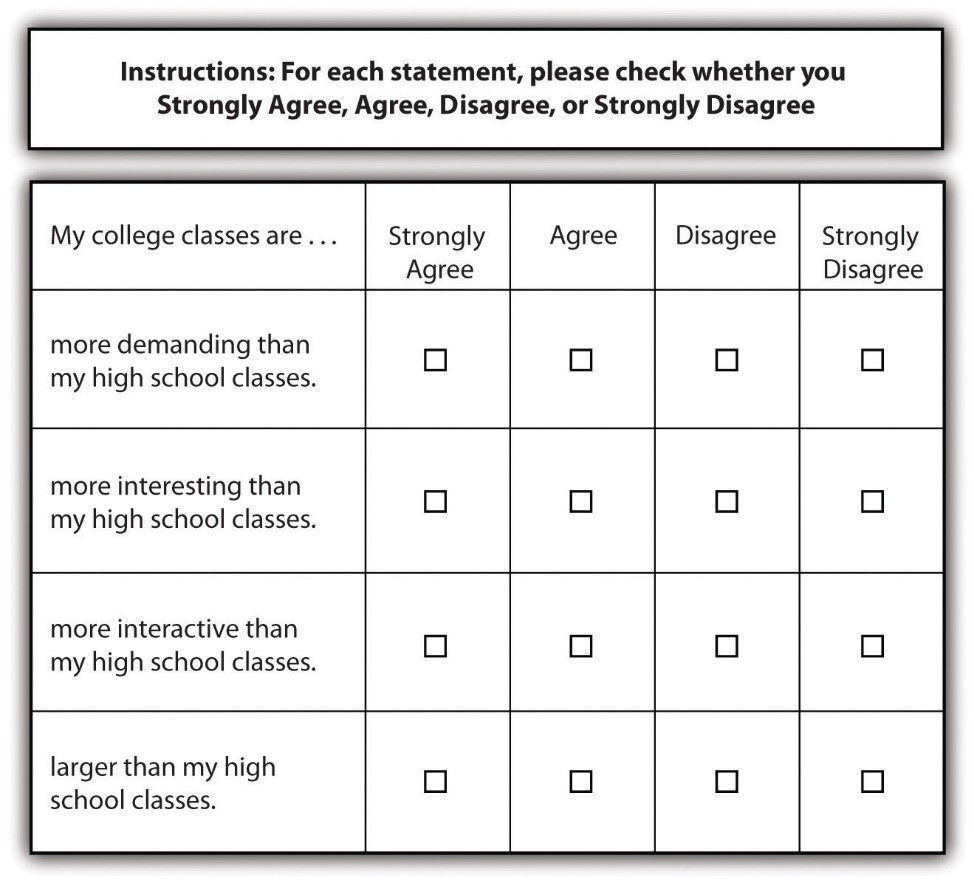

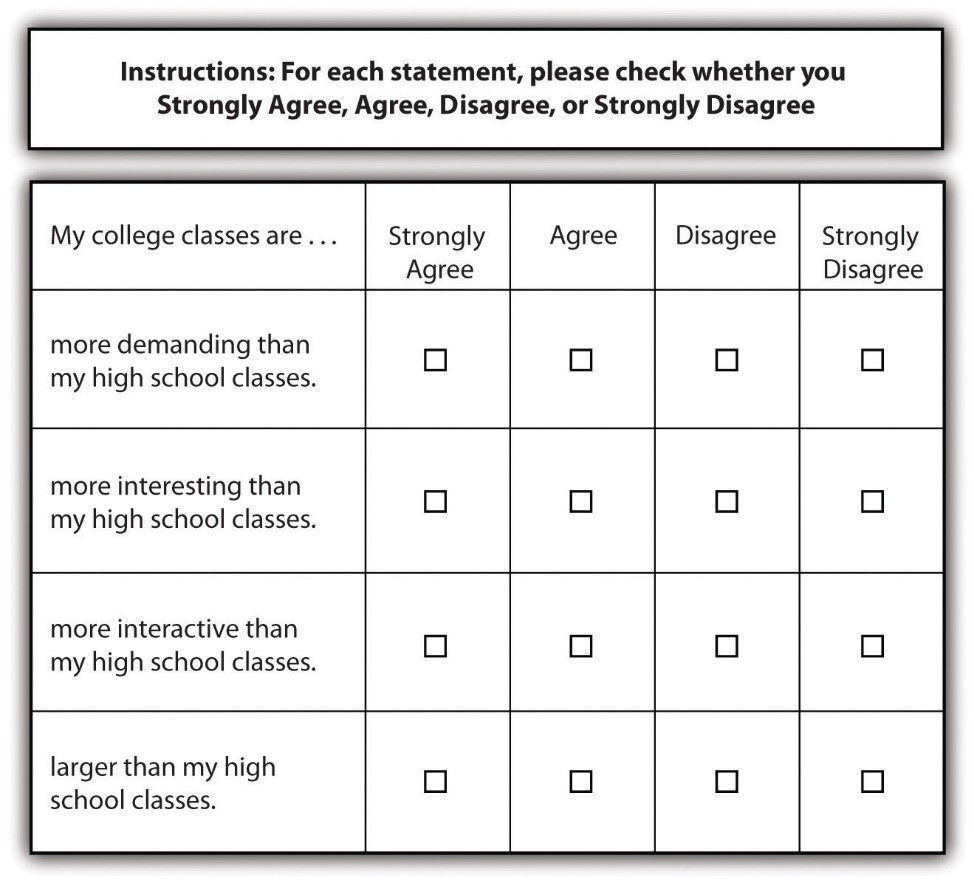

At the end of every semester, students in just about every university classroom in the United States complete similar student evaluations of teaching (SETs). Since every student is likely familiar with these, we can recognize many of the concepts we discussed in the previous sections. There are a number of rating scale questions that ask you to rate the professor, class, and teaching effectiveness on a scale of 1-5. Scores are averaged across students and used to determine the quality of teaching delivered by the faculty member. SET scores are often a principal component of how faculty are reappointed to teaching positions. Would it surprise you to learn that student evaluations of teaching are of questionable quality? If your instructors are assessed with a biased or incomplete measure, how might that impact your education?

Most often, student scores are averaged across questions and reported as a final average. This average is used as one factor, often the most important factor, in a faculty member's reappointment to teaching roles. We learned in this chapter that rating scales are ordinal, not interval or ratio, and the data are categories not numbers. Although rating scales use a familiar 1-5 scale, the numbers 1, 2, 3, 4, & 5 are really just helpful labels for categories like "excellent" or "strongly agree." If we relabeled these categories as letters (A-E) rather than as numbers (1-5), how would you average them?

Averaging ordinal data is methodologically dubious, as the numbers are merely a useful convention. As you will learn in Chapter 14, taking the median value is what makes the most sense with ordinal data. Median values are also less sensitive to outliers than means. When scores are averaged, a single student who has strong negative or positive feelings towards the professor could bias the class's SET scores higher or lower than what the "average" student in the class would say, particularly for classes with few students or in which few students completed evaluations of their teachers.
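To make the point about outliers concrete, here is a minimal sketch in Python. The ratings are invented for illustration and do not come from any real evaluation; the variable and function names are likewise hypothetical. The sketch compares the mean and the median of a small class's 1-5 ratings before and after one strongly dissatisfied student submits a rating of 1. It uses `median_low` from Python's standard `statistics` module so that the reported median is always one of the actual rating categories.

```python
from statistics import mean, median_low

# Hypothetical 1-5 ratings from a small class (invented for illustration).
ratings_without_outlier = [4, 4, 5, 4, 4, 5]
# The same class after one strongly dissatisfied student adds a rating of 1.
ratings_with_outlier = ratings_without_outlier + [1]


def summarize(ratings):
    """Return the mean (rounded to 2 places) and the low median of the ratings."""
    return {"mean": round(mean(ratings), 2), "median": median_low(ratings)}


summary_without = summarize(ratings_without_outlier)
summary_with = summarize(ratings_with_outlier)

print(summary_without)  # {'mean': 4.33, 'median': 4}
print(summary_with)     # {'mean': 3.86, 'median': 4}
```

In this made-up class, one extreme rating pulls the mean down by almost half a point, while the median stays at 4. The smaller the class, the more a single rating can move the mean.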

We care about teaching quality because more effective teachers will produce more knowledgeable and capable students. However, student evaluations of teaching are not particularly good indicators of teaching quality and are not associated with the independently measured learning gains of students (e.g., test scores, final grades) (Uttl et al., 2017).[2] This speaks to a lack of criterion validity. Higher teaching quality should be associated with better learning outcomes for students, but across multiple studies stretching back years, there is no association that cannot be better explained by other factors. To be fair, there are scholars who find that SETs are valid and reliable. For a thorough defense of SETs as well as a historical summary of the literature, see Benton & Cashin (2012).[3]

Even though student evaluations of teaching often contain dozens of questions, researchers often find that the questions are so highly interrelated that one concept (or factor, as it is called in a factor analysis) explains a large portion of the variance in teachers' scores on student evaluations (Clayson, 2018).[4] Personally, I believe, based on completing SETs myself, that this factor is probably best conceptualized as student satisfaction, which is obviously worthwhile to measure, but is conceptually quite different from teaching effectiveness or whether a course achieved its intended outcomes. The lack of a clear operational and conceptual definition for the variable or variables being measured in student evaluations of teaching also speaks to a lack of content validity. Researchers check content validity by comparing the measurement method with the conceptual definition, but without a clear conceptual definition of the concept measured by student evaluations of teaching, it's not clear how we can know our measure is valid. Indeed, the lack of clarity around what is being measured in teaching evaluations impairs students' ability to provide reliable and valid evaluations. So, while many researchers argue that the class average SETs scores are reliable in that they are consistent over time and across classes, it is unclear what exactly is being measured even if it is consistent (Clayson, 2018).[5]

As a faculty member, there are a number of things I can do to influence my evaluations and disrupt validity and reliability. Since SETs scores are associated with the grades students perceive they will receive (e.g., Boring et al., 2016),[6] guaranteeing everyone a final grade of A in my class will likely increase my SETs scores and my chances at tenure and promotion. I could time an email reminder to complete SETs with releasing high grades for a major assignment to boost my evaluation scores. On the other hand, student evaluations might be coincidentally timed with poor grades or difficult assignments that will bias student evaluations downward. Students may also infer I am manipulating them and give me lower SET scores as a result. To maximize my SET scores and chances at promotion, I also need to select which courses I teach carefully. Classes that are more quantitatively oriented generally receive lower ratings than more qualitative and humanities-driven classes, which makes my decision to teach social work research a poor strategy (Uttl & Smibert, 2017).[7] The only manipulative strategy I will admit to using is bringing food (usually cookies or donuts) to class during the period in which students are completing evaluations. Measurement is impacted by context.

As a white cis-gender male educator, I am adversely impacted by SETs because of their sketchy validity, reliability, and methodology. The other flaws with student evaluations actually help me while disadvantaging teachers from oppressed groups. Heffernan (2021)[8] provides a comprehensive overview of the sexism, racism, ableism, and prejudice baked into student evaluations:

"In all studies relating to gender, the analyses indicate that the highest scores are awarded in subjects filled with young, white, male students being taught by white English first language speaking, able-bodied, male academics who are neither too young nor too old (approx. 35–50 years of age), and who the students believe are heterosexual. Most deviations from this scenario in terms of student and academic demographics equates to lower SET scores. These studies thus highlight that white, able-bodied, heterosexual, men of a certain age are not only the least affected, they benefit from the practice. When every demographic group who does not fit this image is significantly disadvantaged by SETs, these processes serve to further enhance the position of the already privileged" (p. 5).

The staggering consistency of studies examining prejudice in SETs has led to some rather superficial reforms like reminding students to not submit racist or sexist responses in the written instructions given before SETs. Yet, even though we know that SETs are systematically biased against women, people of color, and people with disabilities, the overwhelming majority of universities in the United States continue to use them to evaluate faculty for promotion or reappointment. From a critical perspective, it is worth considering why university administrators continue to use such a biased and flawed instrument. SETs produce data that make it easy to compare faculty to one another and track faculty members over time. Furthermore, they offer students a direct opportunity to voice their concerns and highlight what went well.

Students are the people with the greatest knowledge about what happened in the classroom and whether it met their expectations, so providing them with open-ended questions is the most productive part of SETs. Personally, I have found focus groups written, facilitated, and analyzed by student researchers to be more insightful than SETs. MSW student activists and leaders may look for ways to evaluate faculty that are more methodologically sound and less systematically biased, creating institutional change by replacing or augmenting traditional SETs in their department. There is very rarely student input on the criteria and methodology for teaching evaluations, yet students are the most impacted by helpful or harmful teaching practices.

Students should fight for better assessment in the classroom because well-designed assessments provide documentation to support more effective teaching practices and discourage unhelpful or discriminatory practices. Flawed assessments like SETs can lead to a lack of information about problems with courses, instructors, or other aspects of the program. Think critically about what data your program uses to gauge its effectiveness. How might you introduce areas of student concern into how your program evaluates itself? Are there issues with food or housing insecurity, mentorship of nontraditional and first-generation students, or other issues that faculty should consider when they evaluate their program? Finally, as you transition into practice, think about how your agency measures its impact and how it privileges or excludes client and community voices in the assessment process.

Let's consider an example from social work practice. Let's say you work for a mental health organization that serves youth impacted by community violence. How should you measure the impact of your services on your clients and their community? Schools may be interested in reducing truancy, self-injury, or other behavioral concerns. However, by centering delinquent behaviors in how we measure our impact, we may be inattentive to the role of trauma, family dynamics, and other cognitive and social processes beyond "delinquent behavior." Indeed, we may bias our interventions by focusing on things that are not as important to clients' needs. Social workers want to make sure their programs are improving over time, and we rely on our measures to indicate what to change and what to keep. If our measures present a partial or flawed view, we lose our ability to establish and act on scientific truths.

While writing this section, one of the authors wrote this commentary article addressing potential racial bias in social work licensing exams. If you are interested in an example of missing or flawed measures that relates to systems your social work practice is governed by (rather than SETs which govern our practice in higher education) check it out!

You may also be interested in similar arguments against the standard grading scale (A-F), and why grades (numerical, letter, etc.) do not do a good job of measuring learning. Think critically about the role that grades play in your life as a student, your self-concept, and your relationships with teachers. Your test and grade anxiety is due in part to how your learning is measured. Those measurements end up becoming an official record of your scholarship and allow employers or funders to compare you to other scholars. The stakes for measurement are the same for participants in your research study.

Self-reflection and measurement

Student evaluations of teaching are just like any other measure. How we decide to measure what we are researching is influenced by our backgrounds, including our culture, implicit biases, and individual experiences. For me as a middle-class, cisgender white woman, the decisions I make about measurement will probably default to ones that make the most sense to me and others like me, and thus measure characteristics about us most accurately if I don't think carefully about it. There are major implications for research here because this could affect the validity of my measurements for other populations.

This doesn't mean that standardized scales or indices, for instance, won't work for diverse groups of people. What it means is that researchers must not ignore difference in deciding how to measure a variable in their research. Doing so may serve to push already marginalized people further into the margins of academic research and, consequently, social work intervention. Social work researchers, with our strong orientation toward celebrating difference and working for social justice, are obligated to keep this in mind for ourselves and encourage others to think about it in their research, too.

This involves reflecting on what we are measuring, how we are measuring, and why we are measuring. Do we have biases that impacted how we operationalized our concepts? Did we include stakeholders and gatekeepers in the development of our concepts? This can be a way to gain access to vulnerable populations. What feedback did we receive on our measurement process and how was it incorporated into our work? These are all questions we should ask as we are thinking about measurement. Further, engaging in this intentionally reflective process will help us maximize the chances that our measurement will be accurate and as free from bias as possible.

The NASW Code of Ethics discusses social work research and the importance of engaging in practices that do not harm participants. This is especially important considering that many of the topics studied by social workers are those that are disproportionately experienced by marginalized and oppressed populations. Some of these populations have had negative experiences with the research process: historically, their stories have been viewed through lenses that reinforced the dominant culture's standpoint. Thus, when thinking about measurement in research projects, we must remember that the way in which concepts or constructs are measured will impact how marginalized or oppressed persons are viewed. It is important that social work researchers examine current tools to ensure appropriateness for their population(s). Sometimes this may require researchers to use existing tools. Other times, this may require researchers to adapt existing measures or develop completely new measures in collaboration with community stakeholders. In summary, the measurement protocols selected should be tailored and attentive to the experiences of the communities to be studied.

Unfortunately, social science researchers do not do a great job of sharing their measures in a way that allows social work practitioners and administrators to use them to evaluate the impact of interventions and programs on clients. Few scales are published under an open copyright license that allows other people to view them for free and share them with others. Instead, the best way to find a scale mentioned in an article is often to simply search for it in Google with ".pdf" or ".docx" in the query to see if someone posted a copy online (usually in violation of copyright law). As we discussed in Chapter 4, this is an issue of information privilege, or the structuring impact of oppression and discrimination on groups' access to and use of scholarly information. As a student at a university with a research library, you can access the Mental Measurements Yearbook to look up scales and indexes that measure client or program outcomes, while researchers unaffiliated with university libraries cannot do so. Similarly, the vast majority of scholarship in social work and allied disciplines does not share measures, data, or other research materials openly, a best practice in open and collaborative science. In many cases, the public paid for these research materials as part of grants; yet the projects close off access to much of the study information. It is important to underscore these structural barriers to using valid and reliable scales in social work practice. An invalid or unreliable outcome test may cause ineffective or harmful programs to persist or may worsen existing prejudices and oppressions experienced by clients, communities, and practitioners.

But it's not just about reflecting and identifying problems and biases in our measurement, operationalization, and conceptualization—what are we going to do about it? Consider this as you move through this book and become a more critical consumer of research. Sometimes there isn't something you can do in the immediate sense—the literature base at this moment just is what it is. But how does that inform what you will do later?

A place to start: Stop oversimplifying race

We will address many more of the critical issues related to measurement in the next chapter. One way to get started in bringing cultural awareness to scientific measurement is through a critical examination of how we analyze race quantitatively. There are many important methodological objections to how we measure the impact of race. We encourage you to watch Dr. Abigail Sewell's three-part workshop series called "Nested Models for Critical Studies of Race & Racism" for the Inter-university Consortium for Political and Social Research (ICPSR). She discusses how to operationalize and measure inequality, racism, and intersectionality and critiques researchers' attempts to oversimplify or overlook racism when we measure concepts in social science. If you are interested in developing your social work research skills further, consider applying for financial support from your university to attend an ICPSR summer seminar like Dr. Sewell's where you can receive more advanced and specialized training in using research for social change.

- Part 1: Creating Measures of Supraindividual Racism (2-hour video)

- Part 2: Evaluating Population Risks of Supraindividual Racism (2-hour video)

- Part 3: Quantifying Intersectionality (2-hour video)

Key Takeaways

- Social work researchers must be attentive to personal and institutional biases in the measurement process that affect marginalized groups.

- What is measured and how it is measured is shaped by power, and social workers must be critical and self-reflective in their research projects.

Exercises

Think about your current research question and the tool(s) that you see researchers use to gather data.

- How do their positionality and experience shape what variables they are choosing to measure and how they measure concepts?

- Evaluate the measures in your study for potential biases.

- If you are using measures developed by another researcher to inform your ideas, investigate whether the measure is valid and reliable in other studies across cultures.

10.2 Post-positivism: The assumptions of quantitative methods

Learning Objectives

Learners will be able to...

- Ground your research project and working question in the philosophical assumptions of social science

- Define the terms 'ontology' and 'epistemology' and explain how they relate to quantitative and qualitative research methods

- Apply feminist, anti-racist, and decolonization critiques of social science to your project

- Define axiology and describe the axiological assumptions of research projects

What are your assumptions?

Social workers must understand measurement theory to engage in social justice work. That's because measurement theory and its supporting philosophical assumptions will help sharpen your perceptions of the social world. They help social workers build heuristics that can help identify the fundamental assumptions at the heart of social conflict and social problems. They alert you to the patterns in the underlying assumptions that different people make and how those assumptions shape their worldview, what they view as true, and what they hope to accomplish. In the next section, we will review feminist and other critical perspectives on research, and they should help inform you of how assumptions about research can reinforce oppression.

Understanding these deeper structures behind research evidence is a true gift of social work research. Because we acknowledge the usefulness and truth value of multiple philosophies and worldviews contained in this chapter, we can arrive at a deeper and more nuanced understanding of the social world.

Building your ice float

Before we can dive into philosophy, we need to recall our conversation from Chapter 1 about objective truth and subjective truths. Let's test your knowledge with a quick example. Is crime on the rise in the United States? A recent FiveThirtyEight article highlights the disparity between historical trends on crime that are at or near their lowest in thirty years and broad perceptions by the public that crime is on the rise (Koerth & Thomson-DeVeaux, 2020).[9] Social workers skilled at research can marshal objective truth through statistics, much like the authors do, to demonstrate that people's perceptions are not based on a rational interpretation of the world. Of course, that is not where our work ends. Subjective truths might decenter this narrative of ever-increasing crime, deconstruct its racist and oppressive origins, or simply document how that narrative shapes how individuals and communities conceptualize their world.

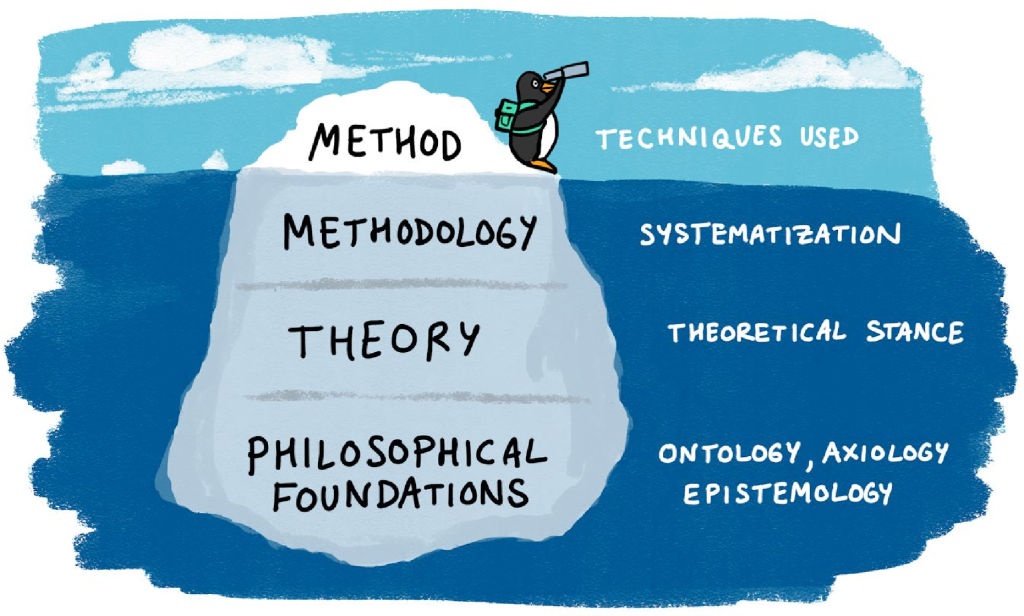

Objective does not mean right, and subjective does not mean wrong. Researchers must understand what kind of truth they are searching for so they can choose a theoretical framework, methodology, and research question that matches. As we discussed in Chapter 1, researchers seeking objective truth (one of the philosophical foundations at the bottom of Figure 7.1) often employ quantitative methods (one of the methods at the top of Figure 7.1). Similarly, researchers seeking subjective truths (again, at the bottom of Figure 7.1) often employ qualitative methods (at the top of Figure 7.1). This chapter is about the connective tissue, and by the time you are done reading, you should have a first draft of a theoretical and philosophical (a.k.a. paradigmatic) framework for your study.

Ontology: Assumptions about what is real & true

In section 1.2, we reviewed the two types of truth that social work researchers seek (objective truth and subjective truths) and linked these with the methods (quantitative and qualitative) that researchers use to study the world. If those ideas aren’t fresh in your mind, you may want to navigate back to that section for an introduction.

These two types of truth rely on different assumptions about what is real in the social world—i.e., they have a different ontology. Ontology refers to the study of being (literally, it means “rational discourse about being”). In philosophy, basic questions about existence are typically posed as ontological, e.g.:

- What is there?

- What types of things are there?

- How can we describe existence?

- What kind of categories can things go into?

- Are the categories of existence hierarchical?

Objective vs. subjective ontologies

At first, it may seem silly to question whether the phenomena we encounter in the social world are real. Of course you exist, your thoughts exist, your computer exists, and your friends exist. You can see them with your eyes. This is the ontological framework of realism, which simply means that the concepts we talk about in science exist independent of observation (Burrell & Morgan, 1979).[10] Obviously, when we close our eyes, the universe does not disappear. You may be familiar with the philosophical conundrum: "If a tree falls in a forest and no one is around to hear it, does it make a sound?"

The natural sciences, like physics and biology, also generally rely on the assumption of realism. Lone trees falling make a sound. We assume that gravity and the rest of physics are there, even when no one is there to observe them. Mitochondria are easy to spot with a powerful microscope, and we can observe and theorize about their function in a cell. The gravitational force is invisible, but clearly apparent from observable facts, such as watching an apple fall from a tree. Of course, our theories about gravity have changed over the years. Improvements were made when observations could not be correctly explained using existing theories and new theories emerged that provided a better explanation of the data.

As we discussed in section 1.2, culture-bound syndromes are an excellent example of where you might come to question realism. Of course, from a Western perspective as researchers in the United States, we think that the Diagnostic and Statistical Manual (DSM) classification of mental health disorders is real and that these culture-bound syndromes are aberrations from the norm. But what if you were a person from Korea experiencing Hwabyeong? Wouldn't you consider the Western diagnosis of somatization disorder to be incorrect or incomplete? This conflict raises the question: do either Hwabyeong or DSM diagnoses like post-traumatic stress disorder (PTSD) really exist at all, or are they just social constructs that only exist in our minds?

If your answer is “no, they do not exist,” you are adopting the ontology of anti-realism (or relativism), or the idea that social concepts do not exist outside of human thought. Unlike the realists who seek a single, universal truth, the anti-realists perceive a sea of truths, created and shared within a social and cultural context. Unlike objective truth, which is true for all, subjective truths will vary based on who you are observing and the context in which you are observing them. The beliefs, opinions, and preferences of people are actually truths that social scientists measure and describe. Additionally, subjective truths do not exist independent of human observation because they are the product of the human mind. We negotiate what is true in the social world through language, arriving at a consensus and engaging in debate within our socio-cultural context.

These theoretical assumptions should sound familiar if you've studied social constructivism or symbolic interactionism in your other MSW courses, most likely in human behavior in the social environment (HBSE).[11] From an anti-realist perspective, what distinguishes the social sciences from natural sciences is human thought. When we try to conceptualize trauma from an anti-realist perspective, we must pay attention to the feelings, opinions, and stories in people's minds. In their most radical formulations, anti-realists propose that these feelings and stories are all that truly exist.

What happens when a situation is incorrectly interpreted? Certainly, who is correct about what is a bit subjective. It depends on who you ask. Even if you can determine whether a person is actually incorrect, they think they are right. Thus, what may not be objectively true for everyone is nevertheless true to the individual interpreting the situation. Furthermore, they act on the assumption that they are right. We all do. Much of our behaviors and interactions are a manifestation of our personal subjective truth. In this sense, even incorrect interpretations are truths, even though they are true only to one person or a group of misinformed people. This leads us to question whether the social concepts we think about really exist. For researchers using subjective ontologies, they might only exist in our minds, whereas researchers who use objective ontologies assume these concepts exist independent of thought.

How do we resolve this dichotomy? As social workers, we know that oftentimes what appears to be an either/or situation is actually a both/and situation. Let's take the example of trauma. There is clearly an objective thing called trauma. We can draw out objective facts about trauma and how it interacts with other concepts in the social world such as family relationships and mental health. However, that understanding is always bound within a specific cultural and historical context. Moreover, each person's individual experience and conceptualization of trauma is also true. Much like a client who tells you their truth through their stories and reflections, when a participant in a research study tells you what their trauma means to them, it is real even though only they experience and know it that way. By using both objective and subjective analytic lenses, we can explore different aspects of trauma: what it means to everyone, always, everywhere, and what it means to one person or group of people, in a specific place and time.

Epistemology: Assumptions about how we know things

Having discussed what is true, we can proceed to the next natural question—how can we come to know what is real and true? This is epistemology. Epistemology is derived from the Ancient Greek epistēmē which refers to systematic or reliable knowledge (as opposed to doxa, or “belief”). Basically, it means “rational discourse about knowledge,” and the focus is the study of knowledge and methods used to generate knowledge. Epistemology has a history as long as philosophy, and lies at the foundation of both scientific and philosophical knowledge.

Epistemological questions include:

- What is knowledge?

- How can we claim to know anything at all?

- What does it mean to know something?

- What makes a belief justified?

- What is the relationship between the knower and what can be known?

While these philosophical questions can seem far removed from real-world interaction, thinking about these kinds of questions in the context of research helps you target your inquiry by informing your methods and helping you revise your working question. Epistemology is closely connected to method as they are both concerned with how to create and validate knowledge. Research methods are essentially epistemologies – by following a certain process we support our claim to know about the things we have been researching. Inappropriate or poorly followed methods can undermine claims to have produced new knowledge or discovered a new truth. This can have implications for future studies that build on the data and/or conceptual framework used.

Research methods can be thought of as essentially stripped down, purpose-specific epistemologies. The knowledge claims that underlie the results of surveys, focus groups, and other common research designs ultimately rest on epistemological assumptions of their methods. Focus groups and other qualitative methods usually rely on subjective epistemological (and ontological) assumptions. Surveys and other quantitative methods usually rely on objective epistemological assumptions. These epistemological assumptions often entail congruent subjective or objective ontological assumptions about the ultimate questions about reality.

Objective vs. subjective epistemologies

One key consideration here is the status of ‘truth’ within a particular epistemology or research method. If, for instance, some approaches emphasize subjective knowledge and deny the possibility of an objective truth, what does this mean for choosing a research method?

We began to answer this question in Chapter 1 when we described the scientific method and objective and subjective truths. Epistemological subjectivism focuses on what people think and feel about a situation, while epistemological objectivism focuses on objective facts independent of our interpretation of a situation (Lin, 2015).[12]

While there are many important questions about epistemology to ask (e.g., "How can I be sure of what I know?" or "What can I not know?" see Willis, 2007[13] for more), from a pragmatic perspective the most relevant epistemological question in the social sciences is whether truth is better accessed using numerical data or words and performances. Generally, scientists approaching research with an objective epistemology (and realist ontology) will use quantitative methods to arrive at scientific truth. Quantitative methods examine numerical data to precisely describe and predict elements of the social world. For example, while people can have different definitions for poverty, an objective measurement such as an annual income of "less than $25,100 for a family of four" provides a precise measurement that can be compared to incomes from all other people in any society from any time period, and refers to real quantities of money that exist in the world. Mathematical relationships are uniquely useful in that they allow comparisons across individuals as well as time and space. In this book, we will review the most common designs used in quantitative research: surveys and experiments. These types of studies usually rely on the epistemological assumption that mathematics can represent the phenomena and relationships we observe in the social world.

Although mathematical relationships are useful, they are limited in what they can tell you. While you can use quantitative methods to measure individuals' experiences and thought processes, you will miss the story behind the numbers. To analyze stories scientifically, we need to examine their expression in interviews, journal entries, performances, and other cultural artifacts using qualitative methods. Because social science studies human interaction and the reality we all create and share in our heads, subjectivists focus on language and other ways we communicate our inner experience. Qualitative methods allow us to scientifically investigate language and other forms of expression, and so to pursue research questions that explore the words people write and speak. This is consistent with epistemological subjectivism's focus on individual and shared experiences, interpretations, and stories.

It is important to note that qualitative methods are entirely compatible with seeking objective truth. Approaching qualitative analysis with a more objective perspective, we look simply at what was said and examine its surface-level meaning. If a person says they brought their kids to school that day, then that is what is true. A researcher seeking subjective truth may focus on how the person says the words—their tone of voice, facial expressions, metaphors, and so forth. By focusing on these things, the researcher can understand what it meant to the person to say they dropped their kids off at school. Perhaps in describing dropping their children off at school, the person thought of their parents doing the same thing or tried to understand why their kid didn't wave back to them as they left the car. In this way, subjective truths are deeper, more personalized, and difficult to generalize.

Self-determination and free will

When scientists observe social phenomena, they often take the perspective of determinism, meaning that what is seen is the result of processes that occurred earlier in time (i.e., cause and effect). This process is represented in the classical formulation of a research question which asks "what is the relationship between X (cause) and Y (effect)?" By framing a research question in such a way, the scientist is disregarding any reciprocal influence that Y has on X. Moreover, the scientist also excludes human agency from the equation. It is simply that a cause will necessitate an effect. For example, a researcher might find that few people living in neighborhoods with higher rates of poverty graduate from high school, and thus conclude that poverty causes adolescents to drop out of school. This conclusion, however, does not address the story behind the numbers. Each person who is counted as graduating or dropping out has a unique story of why they made the choices they did. Perhaps they had a mentor or parent that helped them succeed. Perhaps they faced the choice between employment to support family members or continuing in school.

For this reason, determinism is critiqued as reductionistic in the social sciences because people have agency over their actions. This is unlike natural sciences such as physics. While a table isn't aware of the friction it has with the floor, parents and children are likely aware of the friction in their relationships and act based on how they interpret that conflict. The opposite of determinism is free will, the view that humans can choose how they act and that their behavior and thoughts are not solely determined by what happened prior in a neat, cause-and-effect relationship. Continuing with our education example, researchers adopting a perspective of free will view seeking higher education as the result of a number of mutually influencing forces and the spontaneous and implicit processes of human thought. For these researchers, the picture painted by determinism is too simplistic.

A similar dichotomy can be found in the debate between individualism and holism. When you hear something like "the disease model of addiction leads to policies that pathologize and oppress people who use drugs," the speaker is making a methodologically holistic argument. They are making a claim that abstract social forces (the disease model, policies) can cause things to change. A methodological individualist would critique this argument by saying that the disease model of addiction doesn't actually cause anything by itself. From this perspective, it is the individuals, rather than any abstract social force, who oppress people who use drugs. The disease model itself doesn't cause anything to change; the individuals who follow the precepts of the disease model are the agents who actually oppress people in reality. To an individualist, all social phenomena are the result of individual human action and agency. To a holist, social forces can determine outcomes for individuals without individuals playing a causal role, undercutting free will and research projects that seek to maximize human agency.

Exercises

- Examine an article from your literature review

- Is human action, or free will, informing how the authors think about the people in their study?

- Or are humans more passive and what happens to them more determined by the social forces that influence their life?

- Reflect on how this project's assumptions may differ from your own assumptions about free will and determinism. For example, my beliefs about self-determination and free will always inform my social work practice. However, my working question and research project may rely on social theories that are deterministic and do not address human agency.

Radical change

Another assumption scientists make is around the nature of the social world. Is it an orderly place that remains relatively stable over time? Or is it a place of constant change and conflict? The view of the social world as an orderly place can help a researcher describe how things fit together to create a cohesive whole. For example, systems theory can help you understand how different systems interact with and influence one another, drawing energy from one place to another through an interconnected network with a tendency towards homeostasis. This is a more consensus-focused and status-quo-oriented perspective. Yet, this view of the social world cannot adequately explain the radical shifts and revolutions that occur. It also leaves little room for human action and free will. In the more radical view, change reaches the fundamental assumptions about how the social world works.

For example, at the time of this writing, protests are taking place across the world to remember the killing of George Floyd by Minneapolis police and other victims of police violence and systematic racism. Public support for Black Lives Matter, an anti-racist activist group that focuses on police violence and criminal justice reform, shifted radically in just the two weeks after the killing, a change equivalent to that of the previous 21 months of advocacy and social movement organizing (Cohn & Quealy, 2020).[14] Abolition of police and prisons, once a fringe idea, has moved into the conversation about remaking the criminal justice system from the ground up, centering its historic and current role as an oppressive system for Black Americans. Seemingly overnight, reducing the money spent on police and giving that money to social services became a moderate political position.

A researcher centering change may choose to understand this transformation or even incorporate radical anti-racist ideas into the design and methods of their study. For an example of how to do so, see this participatory action research study working with Black and Latino youth (Bautista et al., 2013).[15] In contrast, a researcher centering consensus and the status quo might focus on incremental changes in what people currently think about the topic. For example, see this survey of social work student attitudes on poverty and race that seeks to understand the status quo of student attitudes and suggest small changes that might change things for the better (Constance-Huggins et al., 2020).[16] To be clear, both studies contribute to racial justice. However, you can see by examining the methods section of each article how the participatory action research article addresses power and values as a core part of its research design, using qualitative ethnography and deep observation over many years, in ways that privilege the voice of people with the least power. In this way, it seeks to rectify the epistemic injustice of excluding and oversimplifying Black and Latino youth. Contrast this more radical approach with the more traditional approach taken in the second article, in which the authors measured student attitudes using a survey developed by researchers.

Exercises

- Examine an article from your literature review

- Traditional studies will be less participatory. The researcher will determine the research question, how to measure it, data collection, etc.

- Radical studies will be more participatory. The researcher seeks to undermine power imbalances at each stage of the research process.

- Pragmatically, more participatory studies take longer to complete and are less suited to projects that need to be completed in a short time frame.

Axiology: Assumptions about values

Axiology is the study of values and value judgements (literally “rational discourse about values [axía]”). In philosophy this field is subdivided into ethics (the study of morality) and aesthetics (the study of beauty, taste and judgement). For the hard-nosed scientist, the relevance of axiology might not be obvious. After all, what difference do one’s feelings make for the data collected? Don’t we spend a long time trying to teach researchers to be objective and remove their values from the scientific method?

Like ontology and epistemology, the import of axiology is typically built into research projects and exists “below the surface”. You might not consciously engage with values in a research project, but they are still there. Similarly, you might not hear many researchers refer to their axiological commitments but they might well talk about their values and ethics, their positionality, or a commitment to social justice.

Our values focus and motivate our research. These values could include a commitment to scientific rigor, or to always act ethically as a researcher. At a more general level we might ask: What matters? Why do research at all? How does it contribute to human wellbeing? Almost all research projects are grounded in trying to answer a question that matters or has consequences. Some research projects are even explicit in their intention to improve things rather than observe them. This is most closely associated with “critical” approaches.

Critical and radical views of science focus on how to spread knowledge and information in a way that combats oppression. These questions are central for creating research projects that fight against the objective structures of oppression—like unequal pay—and their subjective counterparts in the mind—like internalized sexism. For example, a more critical research project would fight not only against statutes of limitations for sexual assault but on how women have internalized rape culture as well. Its explicit goal would be to fight oppression and to inform practice on women's liberation. For this reason, creating change is baked into the research questions and methods used in more critical and radical research projects.

As part of studying radical change and oppression, we are likely employing a model of science that puts values front-and-center within a research project. All social work research is values-driven, as we are a values-driven profession. Historically, though, most social scientists have argued for values-free science. Scientists agree that science helps human progress, but they hold that researchers should remain as objective as possible, which means putting aside politics and personal values that might bias their results, similar to the cognitive biases we discussed in section 1.1. Over the course of the last century, this perspective was challenged by scientists who approached research from an explicitly political and values-driven perspective. As we discussed earlier in this section, feminist critiques strive to understand how sexism biases research questions, samples, measures, and conclusions, while decolonization critiques try to de-center the Western perspective of science and truth.

Linking axiology, epistemology, and ontology

It is important to note that both values-central and values-neutral perspectives are useful in furthering social justice. Values-neutral science is helpful for predicting phenomena. Indeed, it matches well with objectivist ontologies and epistemologies. Let's examine a measure of depression, the Patient Health Questionnaire (PHQ-9). The authors of this measure spent years creating a measure that accurately and reliably measures the concept of depression. This measure is assumed to measure depression in any person, and scales like this are often translated into other languages (and subsequently validated) for more widespread use. The goal is to measure depression in a valid and reliable manner. We can use this objective measure to predict relationships with other risk and protective factors, such as substance use or poverty, as well as evaluate the impact of evidence-based treatments for depression like narrative therapy.