10 Connecting conceptualization and measurement

Chapter Outline

- Measurement modeling (18-minute read)

- Construct validity (25-minute read)

- Post-positivism: The assumptions of quantitative methods (30-minute read)

Content warning: TBD.

10.1 Measurement modeling

Learning Objectives

Learners will be able to…

- Define measurement modeling and how it relates to conceptual and operational definitions

- Describe how operational definitions are impacted by power.

- Critique the validity and reliability of measurement models

With your variables operationalized, it’s time to take a step back and look at the assumptions underlying operational definitions and conceptual definitions in your project and how they rely upon broader assumptions underlying quantitative research methods. By making your assumptions explicit, you can ensure your conceptual definitions and operational definitions coalesce into a coherent measurement model. This chapter adapts the seminal work “Measurement and Fairness” by Jacobs & Wallach (2021) which defines measurement model is a statistical model that links unobservable theoretical constructs (i.e., conceptual definitions from Chapter 8) and observable properties a researcher measures (i.e., operational definitions from Chapter 9) within a sample or data set (Chapter 11, see section on sample quality) (Jackman, 2008).

As we discussed in Chapter 9, such constructs cannot be measured directly and must instead be inferred from measurements of observable properties. The measurement model refers to the assumptions that the indicators represent the deeper, unobservable theoretical constructs we are interested in studying. Like we mentioned in Chapter 9, if we are operationalizing the theoretical concept of masculinity, indicators for that concept might include some of the social roles or fashion choices prescribed to men in society. Of course, masculinity is a contested construct, and the extent to which indicators you measured are complete, unbiased, and reliable measurements of the theoretical construct of masculinity will impact the conclusions you can draw. Errors in measurement models are often where human subjects researchers and powerful systems who measure oppressed groups reinforce domination through social science. This chapter will help you understand the assumptions underlying the measurement models in quantitative research.

Latent variables

Left off here: https://www.ncrm.ac.uk/resources/online/all/?id=20835

I am loath to introduce another “variable” type here, as we have many (independent, dependent, control, moderating, mediating). But all of those variables, as we have operationally defined them, are distinct from their theoretical construct. For example, religiosity refers to how religious someone is. A reasonable indicator for a researcher to measure would be how often someone attends a place of worship, but this would exclude many people who consider themselves highly religious who cannot or do not care to attend services. In this simplistic measurement model, the latent variable, religiosity, is inherently unobservable (Jackman, 2008). Religiosity gets operationalized into a variable that can be measured, church attendance, and this level of abstraction adds another layer of assumptions that can introduce error and bias.

In the previous chapter, we discussed researchers use standardized scales to ensure that valid and reliable measurements of participants. Operationalizing anxiety using the GAD-7 Anxiety inventory is a good idea, but the latent variable of anxiety is distinct from the categories of clinically significant generalized anxiety from the GAD-7. But collapsing these distinctions makes it difficult to address any possible mismatches. When reading a research study, you should be able to see how the researcher’s conceptualization informed what indicators and measurements were used. Collapsing the distinction between conceptual definitions and operational definitions is when fairness-related harms are most often introduced into the scientific process. For example,

Researchers measure what they can to infer broader patterns. Necessary compromises introduce mismatches between (a) the theoretical understanding of the construct purported to be measured and (b) its operationalization. Many of the harms in automated systems like algorithmic monitoring in child welfare, gunshot listening devices, and other real-world measurements are direct results of such mismatches, in addition to the harms perpetuated by human subjects researchers. Some of these harms could have been anticipated and, in some cases, mitigated if viewed through the lens of measurement modeling. To do this, Jacobs & Wallch (2022) introduced fairness-oriented conceptualizations of construct validity and construct reliability, described below.

In addition to the validity and reliability of specific measurement, we need to take a look at how validity and reliability impact the measurement model you’ve constructed for the causal relationships in your research question. How we measure things is both shaped by power arrangements inside our society, and more insidiously, by establishing what is scientifically true, measures have their own power to influence the world. Just like reification in the conceptual world, how we operationally define concepts can reinforce or fight against oppressive forces. The measurement modeling process necessarily involves making assumptions that must be made explicit and tested before the resulting measurements are used.

Measurement error models

Consider the process of measuring a person’s socioeconomic status (SES). From a theoretical perspective, a person’s SES is understood as encompassing their social and economic position in relation to others. Unlike a person’s height, their SES is unobservable, so it cannot be measured directly and must instead be inferred from measurements of observable properties thought to be related to it, such as income, wealth, education, and occupation.

We refer to the abstraction of SES as a construct S and then operationalize S as a latent variable s. Measurement error models are the assumptions the researcher makes about the errors in their measurements. In many contexts, it is reasonable to assume that measurement error is does not impact any variable too much, not in any particular direction, and with distributions that can be understood using normal parametric statistics. However, in some contexts, the measurement error may not behave like researcher expect and may even be correlated with demographic factors, such as race or gender. Systematic error or bias often reinforces existing power relationships in society.

The simplest way to measure a person’s SES is to use an observable property—like their income—as an indicator for it. Letting the construct I represent the abstraction of income and operationalizing I as a latent variable i, this means specifying a both measurement model that links s and i and a measurement error model. For example, if we assume that s and i are linked via the identity function—i.e., that s = i—and we assume that it is possible to obtain error-free measurements of a person’s income—i.e., that ˆi = i—then s = ˆi. Like the previous example, this example highlights that the measurement modeling process necessarily involves making assumptions. Indeed, there are many other measurement models that use income as a proxy for SES but make different assumptions about the specific relationship between them.

Similarly, there are many other measurement error models that make different assumptions about the errors that occur when measuring a person’s income. For example, if we measure a person’s monthly income by totaling the wages deposited into their account over a single one-month period, then we must use a measurement error model that accounts for the possibility that the timing of the one-month period and the timings of their wage deposits may not be aligned. We would also exclude non-wage income such as government benefits or child support. Using a measurement error model that does not account for this possibility—e.g., using ˆi = i—will yield inaccurate measurements.

Human Rights Watch reported exactly this scenario in the context of the Universal Credit benefits system in the U.K. [55]: The system measured a claimant’s monthly income using a one-month rolling period that began immediately after they submitted their claim without accounting for the possibility described above. This meant that the system “might detect that an individual received a £1000 paycheck on March 30 and another £1000 on April 29, but not that each £1000 salary is a monthly wage [leading it] to compute the individual’s benefit in May based on the incorrect assumption that their combined earnings for March and April (i.e., £2000) are their monthly wage,” denying them much-needed resources. Moving beyond income as a proxy for SES, there are arbitrarily many ways to operationalize SES via a measurement model, incorporating both measurements of observable properties, such as wealth, education, and occupation, as well as measurements of other unobservable theoretical constructs, such as cultural capital.

Measuring teacher effectiveness

At the end of every semester, students in just about every university classroom in the United States complete similar student evaluations of teaching (SETs). Since every student is likely familiar with these, we can recognize many of the concepts we discussed in the previous sections. There are number of rating scale questions that ask you to rate the professor, class, and teaching effectiveness on a scale of 1-5. Scores are averaged across students and used to determine the quality of teaching delivered by the faculty member. SETs scores are often a principle component of how faculty are reappointed to teaching positions. Would it surprise you to learn that student evaluations of teaching are of questionable quality? If your instructors are assessed with a biased or incomplete measure, how might that impact your education?

Distribution of responses

Most often, student scores are averaged across questions and reported as a final average. This average is used as one factor, often the most important factor, in a faculty member’s reappointment to teaching roles. We learned in the previous chapter that rating scales are ordinal, not interval or ratio, and the data are categories not numbers. Although rating scales use a familiar 1-5 scale, the numbers 1, 2, 3, 4, & 5 are really just helpful labels for categories like “excellent” or “strongly agree.” If we relabeled these categories as letters (A-E) rather than as numbers (1-5), how would you average them?

Averaging ordinal data is methodologically dubious, as the numbers are merely a useful convention. As you will learn in Part 4, taking the median value is what makes the most sense with ordinal data. Median values are also less sensitive to outliers. So, a single student who has strong negative or positive feelings towards the professor could bias the class’s SETs scores higher or lower than what the “average” student in the class would say, particularly for classes with few students or in which fewer students completed evaluations of their teachers.

Unclear latent variable

Even though student evaluations of teaching often contain dozens of questions, researchers often find that the questions are so highly interrelated that one concept (or factor, as it is called in a factor analysis) explains a large portion of the variance in teachers’ scores on student evaluations (Clayson, 2018).[1] Personally, I believe based on completing SETs myself that factor is probably best conceptualized as student satisfaction, which is obviously worthwhile to measure, but is conceptually quite different from teaching effectiveness or whether a course achieved its intended outcomes. The lack of a clear operational and conceptual definition for the variable or variables being measured in student evaluations of teaching also speaks to a lack of content validity. Researchers check content validity by comparing the measurement method with the conceptual definition, but without a clear conceptual definition of the concept measured by student evaluations of teaching, it’s not clear how we can know our measure is valid. Indeed, the lack of clarity around what is being measured in teaching evaluations impairs students’ ability to provide reliable and valid evaluations. So, while many researchers argue that the class average SETs scores are reliable in that they are consistent over time and across classes, it is unclear what exactly is being measured even if it is consistent (Clayson, 2018).[2]

Measurement error model for SETs

We care about teaching quality because more effective teachers will produce more knowledgeable and capable students. However, student evaluations of teaching are not particularly good indicators of teaching quality and are not associated with the independently measured learning gains of students (i.e., test scores, final grades) (Uttl et al., 2017).[3] This speaks to the lack of criterion validity. Higher teaching quality should be associated with better learning outcomes for students, but across multiple studies stretching back years, there is no association that cannot be better explained by other factors. To be fair, there are scholars who find that SETs are valid and reliable. For a thorough defense of SETs as well as a historical summary of the literature see Benton & Cashin (2012).[4]

Some sources of error are easy to spot. As a faculty member, there are a number of things I can do to influence my evaluations and disrupt validity and reliability. Since SETs scores are associated with the grades students perceive they will receive (e.g., Boring et al., 2016),[5] guaranteeing everyone a final grade of A in my class will likely increase my SETs scores and my chances at tenure and promotion. I could time an email reminder to complete SETs with releasing high grades for a major assignment to boost my evaluation scores. On the other hand, student evaluations might be coincidentally timed with poor grades or difficult assignments that will bias student evaluations downward. Students may also infer I am manipulating them and give me lower SET scores as a result. To maximize my SET scores and chances and promotion, I also need to select which courses I teach carefully. Classes that are more quantitatively oriented generally receive lower ratings than more qualitative and humanities-driven classes, which makes my decision to teach social work research a poor strategy (Uttl & Smibert, 2017).[6] The only manipulative strategy I will admit to using is bringing food (usually cookies or donuts) to class during the period in which students are completing evaluations. I can systematically bias scores in a positive direction by feeding students nice treats and telling them how much I appreciated them before handing out the student evaluation forms.

As a white cis-gender male educator, I am adversely impacted by SETs because of their sketchy validity, reliability, and methodology. The other flaws with student evaluations actually help me while disadvantaging teachers from oppressed groups. Heffernan (2021)[7] provides a comprehensive overview of the sexism, racism, ableism, and prejudice baked into student evaluations:

“In all studies relating to gender, the analyses indicate that the highest scores are awarded in subjects filled with young, white, male students being taught by white English first language speaking, able-bodied, male academics who are neither too young nor too old (approx. 35–50 years of age), and who the students believe are heterosexual. Most deviations from this scenario in terms of student and academic demographics equates to lower SET scores. These studies thus highlight that white, able-bodied, heterosexual, men of a certain age are not only the least affected, they benefit from the practice. When every demographic group who does not fit this image is significantly disadvantaged by SETs, these processes serve to further enhance the position of the already privileged” (p. 5).

The staggering consistency of studies examining prejudice in SETs has led to some rather superficial reforms like reminding students to not submit racist or sexist responses in the written instructions given before SETs. Yet, even though we know that SETs are systematically biased against women, people of color, and people with disabilities, the overwhelming majority of universities in the United States continue to use them to evaluate faculty for promotion or reappointment. From a critical perspective, it is worth considering why university administrators continue to use such a biased and flawed instrument. SETs produce data that make it easy to compare faculty to one another and track faculty members over time. Furthermore, they offer students a direct opportunity to voice their concerns and highlight what went well.

Teaching quality or effectiveness is not a well-behaved variable. Bias can explain a lot of the variance produced by the measurement model, and conclusions drawn from SETs should include this limitation. For example, if we were interested in testing the impact of using a free textbook on student learning outcomes, we might use SETs as a measure of teaching effectiveness. Free textbooks might make teachers more effective because students can access the book without a paywall (see Hilton, YEAR for more on the access hypothesis). Using SETs as our dependent variable to brings these limitations to the conclusions we draw about the theoretical concept of teaching effectiveness. Similarly, tenure and promotion schemes that require a minimum average score across SETs would bring the errors associated with SETs into hiring decisions.

Consider the risks: Incomplete & flawed measures

As the people with the greatest knowledge about what happened in the classroom as whether it met their expectations, providing students with open-ended questions is the most productive part of SETs. Personally, I have found focus groups written, facilitated, and analyzed by student researchers to be more insightful than SETs. MSW student activists and leaders may look for ways to evaluate faculty that are more methodologically sound and less systematically biased, creating institutional change by replacing or augmenting traditional SETs in their department. There is very rarely student input on the criteria and methodology for teaching evaluations, yet students are the most impacted by helpful or harmful teaching practices.

Students should fight for better assessment in the classroom because well-designed assessments provide documentation to support more effective teaching practices and discourage unhelpful or discriminatory practices. Flawed assessments like SETs, can lead to a lack of information about problems with courses, instructors, or other aspects of the program. Think critically about what data your program uses to gauge its effectiveness. How might you introduce areas of student concern into how your program evaluates itself? Are there issues with food or housing insecurity, mentorship of nontraditional and first generation students, or other issues that faculty should consider when they evaluate their program? Finally, as you transition into practice, think about how your agency measures its impact and how it privileges or excludes client and community voices in the assessment process.

Let’s consider an example from social work practice. Let’s say you work for a mental health organization that serves youth impacted by community violence. How should you measure the impact of your services on your clients and their community? Schools may be interested in reducing truancy, self-injury, or other behavioral concerns. However, by centering delinquent behaviors in how we measure our impact, we may be inattentive to the role of trauma, family dynamics, and other cognitive and social processes beyond “delinquent behavior.” Indeed, we may bias our interventions by focusing on things that are not as important to clients’ needs. Social workers want to make sure their programs are improving over time, and we rely on our measures to indicate what to change and what to keep. If our measures present a partial or flawed view, we lose our ability to establish and act on scientific truths.

SETs are important to me a a faculty member. For students, you may be interested in similar arguments against the standard grading scale (A-F), and why grades (numerical, letter, etc.) do not do a good job of measuring learning. Think critically about the role that grades play in your life as a student, your self-concept, and your relationships with teachers. Your test and grade anxiety is due in part to how your learning is measured. Those measurements end up becoming an official record of your scholarship and allow employers or funders to compare you to other scholars. The stakes for measurement are the same for participants in your research study.

After you graduate, social work licensing examinations govern entry-to-practice regulations for most clinicians. I wrote a commentary article addressing potential racial bias in social work licensing exams. If you are interested in an example of missing or flawed measures that relates to systems your social work practice is governed by (rather than SETs which govern our practice in higher education) check it out!

Key Takeaways

- Mismatches between conceptualization and measurement are often places in which bias and systemic injustice enter the research process.

- Measurement modeling is a way of foregrounding researcher’s assumptions in how they connect their conceptual definitions and operational definitions.

Exercises

- Outline the measurement model in your research study.

- Distinguish between the measures in your study and their underlying theoretical constructs.

- Assess the validity, reliability, and fairness of the measures in your operational definitions.

- Search for articles with standardized measures.

- Look at the citations in the Methods sections of journal articles

- Draft a measurement error model.

- Are there any sources of systematic error or bias that could interfere with the conclusions you want to make?

- How large do you expect your measurement error to be?

10.2 Construct validity

Learning Objectives

Learners will be able to…

- Assess the construct validity of measurement models

- Apply the specific subtypes of construct validity to the measurement model in your research study

- Critique the fairness of automatic decision-making systems that use incomplete and flawed measurement models

Diving into measurement modeling further, we will examine two measurement models. First, we will examine Maslach’s Burnout Inventory (MBI), a commonly-used standardized measure of burnout used in social work research. Following the original publication of the MBI in 1981, researchers developed new versions of the MBI for different target populations: Human Services Survey (MBI-HSS), Human Services Survey for Medical Personnel (MBI-HSS (MP)), Educators Survey (MBI-ES), General Survey (MBI-GS),[5] and General Survey for Students (MBI-GS [S]). Operationalizing social worker burnout using the MBI-HSS uses a three-dimension model for burnout : emotional exhaustion, depersonalization, and personal accomplishment. If one, instead, adopted the university and college student burnout measure, the three-dimensional model of burnout would nerf emotional exhaustion to exhaustion, depersonalization to cynicism, and professional accomplishment to professional efficacy. Students have less extreme versions of the underlying theoretical constructs of burnout (e.g., have not personally accomplished as much but feel personally efficacious about their early work).

I have used the MBI with social work students completing field practicums, and well, which would you choose for measuring MSW students? Are student field workers best measured by the MBI-GS(S) because they are students or are they practitioners best measured by the MBI-HSS because they are health services workers? The underlying theoretical framework for my study was that many social work graduate students already work in human service agencies, making the MBI-HSS more appropriate. Furthermore, if we saw the more dramatic burnout indicators on the MBI-HSS dimensions like depersonalization, we would establish that students are experiencing more intense burnout than the MBI-GS(S) anticipated with concepts like cynicism.

Thus, our research team adopted a measurement model for the burnout of social work students using the MBI-HSS which entailed these assumptions:

- The theoretical construct of social work practicum laborer burnout influences the real-world measurements obtained by the MBI-HSS.

- Burnout has three dimensions: emotional exhaustion, depersonalization, and personal accomplishment.

- Someone with higher emotional exhaustion has higher burnout (direct relationship).

- Someone with higher depersonalization has higher burnout (direct relationship).

- Someone with higher personal accomplishment has less burnout (inverse relationship).

- There is no cut score for burnout/no burnout. Conclusions can be drawn about each burnout dimension, but they do not sum into a larger score.

- The MBI is a valid, reliable, and fair measurement of burnout (with specific attention to your target population)

Second, we will analyze the Association of Social Work Boards (ASWB) examinations required in most states for licensed masters and bachelors practice, and in all state for licensed clinical social work practice. A more complete discussion of the measurement model issues in ASWB examinations can be found in a special issue of Advances in Social Work on licensure exams. Our discussion here will discuss how state boards use an applicant’s ASWB examination score to regulate licensed practice. One’s ASWB examination score measures “first-day competence” of social workers applying for a given license level (e.g., bachelors, masters, clinical). The social worker’s true competence is the latent variable that cannot be observed directly.

Examinations have a public protection purpose, so the theoretical construct of competence is assumed to be equivalent to public protection. A passing test score indicates a lower likelihood to commit ethical and legal offenses. When boards adopt ASWB exams, they adopt their measurement model for public protection. Look at the Content Outline with knowledge, skills, and abilities (KSAs) KSAs for the ASWB masters examination for a clearer sense of the assumptions that underly ASWB examinations. Pages 42-48 of ASWB’s 2024 Practice Analysis provide the exact statements (e.g., “Impact of urbanization, globalization, environmental hazards, and climate change on individuals, families, groups, organizations, and communities”) that ASWB asks practitioners rate according to their frequency and importance for safe and ethical social work practice. ASWB pays and trains subject matter experts (i.e., social workers) to write questions, evaluate them for bias, and set standards for cut scores. The measurement model that states use to assure public safety from unethical social work practice can be outlined as such:

- Public safety is equivalent to minimum competence for first-day practice.

- The theoretical construct of first-day competence influences one’s score on the ASWB examinations.

- First-day competence is composed of three to four content areas, each with a few dozen statements reflecting the knowledge, skills, and abilities of an ethical and safe social worker.

- There cut score varies based on the difficulty of the pool of items included in different test versions.

- Someone who fails the examination by a single point is incompetent to practice social work as a licensed practitioner.

- The ASWB examinations are valid, reliable, and fair measurements of social work competence.

The measurement modeling process necessarily involves making assumptions. However, these assumptions must be made explicit and tested before the resulting measurements are used. Leaving them unexamined obscures any possible mismatches between the theoretical understanding of the construct purported to be measured and its operationalization, in turn obscuring any resulting fairness-related harms. In this section we apply and extend the measurement quality concepts from Chapter 9 to address specifically aspects of fairness and social justice.

Construct validity and its subtypes

Construct validity is roughly analogous to the concept of statistical unbiasedness [30]. Establishing construct validity means demonstrating, in a variety of ways, that the measurements obtained from measurement model are accurate. Construct validity can be broken down into a number of sub-types including:

- Content validity: Does the operationalization capture all relevant aspects of the construct purported to be measured?

- Face validity: Do the measurements look plausible?

- Convergent validity: Do they correlate with other measurements of the same construct?

- Discriminant validity: Do the measurements vary in ways that suggest that the operationalization may be inadvertently capturing aspects of other constructs?

- Predictive validity: Are the measurements predictive of measurements of any relevant observable properties (and other unobservable theoretical constructs) thought to be related to the construct, but not incorporated into the operationalization?

- Hypothesis validity: Do the measurements support known hypotheses about the construct?

- Consequential validity: What are the consequences of using the measurements—including any societal impacts [40, 52].

Jacobs & Wallach (2022) emphasize that construct validity is not a yes/no box to be checked! Construct validity is always a matter of degree, supported by critical reasoning [36]. While we have used some of these validity terms before in Chapter 10 to assess measurement quality, the difference in Chapter 11 will be relating the operationalization back to the underlying theoretical concept (i.e., latent variable). That is, we are looking not only at the validity, reliability, fairness of each operational definition but how measurement error impacts the conclusions we can draw about latent variables, the theoretical constructs that are not directly measurable.

Different disciplines have different conceptualizations of construct validity, each with its own rich history. For example, in some disciplines, construct validity is considered distinct from content validity and criterion validity, while in other disciplines, content validity and criterion validity are grouped under the umbrella of construct validity. Jacobs & Wallach’s (2022) conceptualization unites traditions from political science, education, and psychology by bringing together the seven different aspects of construct validity that we describe below. They argue that each of these aspects plays a unique and important role in understanding fairness in computational systems.

Face validity

Face validity refers to the extent to which the measurements obtained from a measurement model look plausible— a “sniff test” of sorts. It is inherently limited, as it establishes a plausible link between the latent variable and the observations from a measure. Face validity is a prerequisite for establishing construct validity. If the measurements obtained from a measurement model aren’t facially valid, you can stop your analysis. That measurement model is unlikely to possess other aspects of construct validity.

Using the example from section 2.1, measurements obtained by using income as a proxy for SES would most likely possess face validity. SES and income are certainly related and, in general, a person at the high end of the income distribution (e.g., a senior hospital administrator) will have a different SES than a person at the low end (e.g., a home health worker). Although you could poke holes in that validity, and we will, there is a plausible logic connecting income and SES. Similarly, the ASWB examinations are based on knowledge, skills, and abilities that are plausibly connected to social work practice. Every seven years or so, ASWB surveys practitioners with similar KSAs, making incremental changes over time as the profession changes. The MBI-HSS is based on the International Classification of Diseases (ICD-11) definition for burnout as an occupational problem (QD85). These are facially valid measures, and they have plausible relationships to the underlying theoretical constructs.

Content validity

Content validity refers to the extent to which an operationalization wholly and fully captures the substantive nature of the construct purported to be measured. This aspect of construct validity has three sub-aspects, which we describe below.

Contestedness

The first sub-aspect relates to the construct’s contestedness. If a construct is essentially contested then it has multiple context dependent, and sometimes even conflicting, theoretical understandings. Contestedness makes it inherently hard to assess content validity: if a construct has multiple theoretical understandings, then it is unlikely that a single operationalization can wholly and fully capture its substantive nature in a meaningful fashion. Researchers must articulate which understanding is being operationalized [53] because it is often the case that unobservable theoretical constructs are essentially contested, yet we still wish to measure them.

The model for the MBI-HSS is contested. As I mentioned before, it could be clearly contested that students are best measured using the student scale (MBI-GS(S)). One might contest the content validity of my operational definition by pointing out that I might miss dimensions of cynicism or efficacy not captured by the MBI-HSS subscales of depersonalization or accomplishment. Looking deeper, there are other standardized measures and theoretical conceptualizations of burnout. [LEFT OFF HERE]

Of the models described previously, most are intended to measure unobservable theoretical constructs that are (relatively) uncontested. One possible exception is patient benefit, which can be understood in a variety of different ways. However, the understanding that is operationalized in most high-risk care management enrollment models is clearly articulated. As Obermeyer et al. explain, “[the patients] with the greatest care needs will benefit the most” from enrollment in high-risk care management programs [43].

Substantive validity

The second sub-aspect of content validity is sometimes known as substantive validity. This sub-aspect moves beyond the theoretical understanding of the construct purported to be measured and focuses on the measurement modeling process—i.e., the assumptions made when moving from abstractions to mathematics. Establishing substantive validity means demonstrating that the operationalization incorporates measurements of those—and only those—observable properties (and other unobservable theoretical constructs, if appropriate) thought to be related to the construct. For example, although a person’s income contributes to their SES, their income is by no means the only contributing factor. Wealth, education, and occupation all affect a person’s SES, as do other unobservable theoretical constructs, such as cultural capital. For instance, an artist with significant wealth but a low income should have a higher SES than would be suggested by their income alone.

As another example, COMPAS defines recidivism as “a new misdemeanor or felony arrest within two years.” By assuming that arrests are a reasonable proxy for crimes committed, COMPAS fails to account for false arrests or crimes that do not result in arrests [50]. Indeed, no computational system can ever wholly and fully capture the substantive nature of crime by using arrest data as a proxy. Similarly, high-risk care management enrollment models assume that care costs are a reasonable proxy for care needs. However, a patient’s care needs reflect their underlying health status, while their care costs reflect both their access to care and their health status.

Structural validity

Finally, establishing structural validity, the third sub-aspect of content validity, means demonstrating that the operationalization captures the structure of the relationships between the incorporated observable properties (and other unobservable theoretical constructs, if appropriate) and the construct purported to be measured, as well as the interrelationships between them [36, 40].

In addition to assuming that teacher effectiveness is wholly and fully captured by students’ test scores—a clear threat to substantive validity [2]—the EVAAS MRM assumes that a student’s test score for subject j in grade k in year l is approximately equal to the sum of the state or district’s estimated mean score for subject j in grade k in year l and the student’s current and previous teachers’ effects (weighted by the fraction of the student’s instructional time attributed to each teacher). However, this assumption ignores the fact that, for many students, the relationship may be more complex.

Convergent validity

Convergent validity refers to the extent to which the measurements obtained from a measurement model correlate with other measurements of the same construct, obtained from measurement models for which construct validity has already been established. This aspect of construct validity is typically assessed using quantitative methods, though doing so can reveal qualitative differences between different operationalizations.

We note that assessing convergent validity raises an inherent challenge: “If a new measure of some construct differs from an established measure, it is generally viewed with skepticism. If a new measure captures exactly what the previous one did, then it is probably unnecessary” [49]. The measurements obtained from a new measurement model should therefore deviate only slightly from existing measurements of the same construct. Moreover, for the model to be viewed as possessing convergent validity, these deviations must be well justified and supported by critical reasoning.

Many value-added models, including the EVAAS MRM, lack convergent validity [2]. For example, in Weapons of Math Destruction [46], O’Neil described Sarah Wysocki, a fifth-grade teacher who received a low score from a value-added model despite excellent reviews from her principal, her colleagues, and her students’ parents.

As another example, measurements of SES obtained from the model described previously and measurements of SES obtained from the National Committee on Vital and Health Statistics would likely correlate somewhat because both operationalizations incorporate income. However, the latter operationalization also incorporates measurements of other observable properties, including wealth, education, occupation, economic pressure, geographic location, and family size [45]. As a result, it is also likely that there would also be significant differences between the two sets of measurements. Investigating these differences might reveal aspects of the substantive nature of SES, such as wealth or education, that are missing from the model described in section 2.2. In other words, and as we described above, assessing convergent validity can reveal qualitative differences between different operationalizations of a construct.

We emphasize that assessing the convergent validity of a measurement model using measurements obtained from measurement models that have not been sufficiently well validated can yield a false sense of security. For example, scores obtained from COMPAS would likely correlate with scores obtained from other models that similarly use arrests as a proxy for crimes committed, thereby obscuring the threat to content validity that we described above.

Discriminant validity

Discriminant validity refers to the extent to which the measurements obtained from a measurement model vary in ways that suggest that the operationalization may be inadvertently capturing aspects of other constructs. Measurements of one construct should only correlate with measurements of another to the extent that those constructs are themselves related. As a special case, if two constructs are totally unrelated, then there should be no correlation between their measurements [25].

Establishing discriminant validity can be especially challenging when a construct has relationships with many other constructs. SES, for example, is related to almost all social and economic constructs, albeit to varying extents. For instance, SES and gender are somewhat related due to labor segregation and the persistent gender wage gap, while SES and race are much more closely related due to historical racial inequalities resulting from structural racism. When assessing the discriminant validity of the model described previously, we would therefore hope to find correlations that reflect these relationships. If, however, we instead found that the resulting measurements were perfectly correlated with gender or uncorrelated with race, this would suggest a lack of discriminant validity.

As another example, Obermeyer et al. found a strong correlation between measurements of patients’ future care needs, operationalized as future care costs, and race [43]. According to their analysis of one model, only 18% of the patients identified for enrollment in highrisk care management programs were Black. This correlation contradicts expectations. Indeed, given the enormous racial health disparities in the U.S., we might even expect to see the opposite pattern. Further investigation by Obermeyer et al. revealed that this threat to discriminant validity was caused by the confounding factor that we described in section 2.5: Black and white patients with comparable past care needs had radically different past care costs—a consequence of structural racism that was then exacerbated by the model.

Predictive validity

Predictive validity refers to the extent to which the measurements obtained from a measurement model are predictive of measurements of any relevant observable properties (and other unobservable theoretical constructs) thought to be related to the construct purported to be measured, but not incorporated into the operationalization. Assessing predictive validity is therefore distinct from out-of-sample prediction [24, 41]. Predictive validity can be assessed using either qualitative or quantitative methods. We note that in contrast to the aspects of construct validity that we discussed above, predictive validity is primarily concerned with the utility of the measurements, not their meaning.

As a simple illustration of predictive validity, taller people generally weigh more than shorter people. Measurements of a person’s height should therefore be somewhat predictive of their weight. Similarly, a person’s SES is related to many observable properties— ranging from purchasing behavior to media appearances—that are not always incorporated into models for measuring SES. Measurements obtained by using income as a proxy for SES would most likely be somewhat predictive of many of these properties, at least for people at the high and low ends of the income distribution.

We note that the relevant observable properties (and other unobservable theoretical constructs) need not be “downstream” of (i.e., thought to be influenced by) the construct. Predictive validity can also be assessed using “upstream” properties and constructs, provided that they are not incorporated into the operationalization. For example, Obermeyer et al. investigated the extent to which measurements of patients’ future care needs, operationalized as future care costs, were predictive of patients’ health statuses (which were not part of the model that they analyzed) [43]. They found that Black and white patients with comparable future care costs did not have comparable health statuses—a threat to predictive validity caused (again) by the confounding factor described previously.

Hypothesis validity

Hypothesis validity refers to the extent to which the measurements obtained from a measurement model support substantively interesting hypotheses about the construct purported to be measured. Much like predictive validity, hypothesis validity is primarily concerned with the utility of the measurements. We note that the main distinction between predictive validity and hypothesis validity hinges on the definition of “substantively interesting hypotheses.” As a result, the distinction is not always clear cut. For example, is the hypothesis “People with higher SES are more likely to be mentioned in the New York Times” sufficiently substantively interesting? Or would it be more appropriate to use the hypothesized relationship to assess predictive validity? For this reason, some traditions merge predictive and hypothesis validity [e.g., 30].

Turning again to the value-added models discussed previously, it is extremely unlikely that the dramatically variable scores obtained from such models would support most substantively interesting hypotheses involving teacher effectiveness, again suggesting a possible mismatch between the theoretical understanding of the construct purported to be measured and its operationalization.

Using income as a proxy for SES would likely support some— though not all—substantively interesting hypotheses involving SES. For example, many social scientists have studied the relationship between SES and health outcomes, demonstrating that people with lower SES tend to have worse health outcomes. Measurements of SES obtained from the model described previously would likely support this hypothesis, albeit with some notable exceptions. For instance, wealthy college students often have low incomes but good access to healthcare. Combined with their young age, this means that they typically have better health outcomes than other people with comparable incomes. Examining these exceptions might reveal aspects of the substantive nature of SES, such as wealth and education, that are missing from the model described previously.

Consequential validity

Consequential validity, the final aspect in our fairness-oriented conceptualization of construct validity, is concerned with identifying and evaluating the consequences of using the measurements obtained from a measurement model, including any societal impacts. Assessing consequential validity often reveals fairness-related harms. Consequential validity was first introduced by Messick, who argued that the consequences of using the measurements obtained from a measurement model are fundamental to establishing construct validity [40]. This is because the values that are reflected in those consequences both derive from and contribute back the theoretical understanding of the construct purported to be measured. In other words, the “measurements both reflect structure in the natural world, and impose structure upon it,” [26]—i.e., the measurements shape the ways that we understand the construct itself. Assessing consequential validity therefore means answering the following questions: How is the world shaped by using the measurements? What world do we wish to live in? If there are contexts in which the consequences of using the measurements would cause us to compromise values that we wish to uphold, then the measurements should not be used in those contexts.

For example, when designing a kitchen, we might use measurements of a person’s standing height to determine the height at which to place their kitchen countertop. However, this may render the countertop inaccessible to them if they use a wheelchair. As another example, because the Universal Credit benefits system described previously assumed that measuring a person’s monthly income by totaling the wages deposited into their account over a single one-month period would yield error-free measurements, many people—especially those with irregular pay schedules— received substantially lower benefits than they were entitled to.

The consequences of using scores obtained from value-added models are well described in the literature on fairness in measurement. Many school districts have used such scores to make decisions about resource distribution and even teachers’ continued employment, often without any way to contest these decisions [2, 3]. In turn, this has caused schools to manipulate their scores and encouraged teachers to “teach to the test,” instead of designing more diverse and substantive curricula [46]. As well as the cases described above in sections 3.1.1 and 3.2.3, in which teachers were fired on the basis of low scores despite evidence suggesting that their scores might be inaccurate, Amrein-Beardsley and Geiger [3] found that EVAAS consistently gave lower scores to teachers at schools with higher proportions of non-white students, students receiving special education services, lower-SES students, and English language learners. Although it is possible that more effective teachers simply chose not to teach at those schools, it is far more likely that these lower scores reflect societal biases and structural inequalities. When scores obtained from value-added models are used to make decisions about resource distribution and teachers’ continued employment, these biases and inequalities are then exacerbated.

The consequences of using scores obtained from COMPAS are also well described in the literature on fairness in computational systems, most notably by Angwin et al. [4], who showed that COMPAS incorrectly scored Black defendants as high risk more often than white defendants, while incorrectly scoring white defendants as low risk more often than Black defendants. By defining recidivism as “a new misdemeanor or felony arrest within two years,” COMPAS fails to account for false arrests or crimes that do not result in arrests. This assumption therefore encodes and exacerbates racist policing practices, leading to the racial disparities uncovered by Angwin et al. Indeed, by using arrests as a proxy for crimes committed, COMPAS can only exacerbate racist policing practices, rather than transcending them [7, 13, 23, 37, 39]. Furthermore, the COMPAS documentation asserts that “the COMPAS risk scales are actuarial risk assessment instruments. Actuarial risk assessment is an objective method of estimating the likelihood of reoffending. An individual’s level of risk is estimated based on known recidivism rates of offenders with similar characteristics” [19]. By describing COMPAS as an “objective method,” Northpointe misrepresents the measurement modeling process, which necessarily involves making assumptions and is thus never objective. Worse yet, the label of objectiveness obscures the organizational, political, societal, and cultural values that are embedded in COMPAS and reflected in its consequences.

Finally, we return to the high-risk care management models described in section 2.5. By operationalizing greatest care needs as greatest care costs, these models fail to account for the fact that patients with comparable past care needs but different access to care will likely have different past care costs. This omission has the greatest impact on Black patients. Indeed, when analyzing one such model, Obermeyer et al. found that only 18% of the patients identified for enrollment were Black [43]. In addition, Obermeyer et al. found that Black and white patients with comparable future care costs did not have comparable health statuses. In other words, these models exacerbate the enormous racial health disparities in the U.S. as a consequence of a seemingly innocuous assumption.

Positionality & data equity

Because measurement modeling is often skipped over, researchers and practitioners may be inclined to collapse the distinctions between constructs and their operationalizations in how they talk about, think about, and study the concepts in their research question. But collapsing these distinctions removes opportunities to anticipate and mitigate fairness-related harms by eliding the space in which they are most often introduced. Further compounding this issue is the fact that measurements of unobservable theoretical constructs are often treated as if they were obtained directly and without errors—i.e., a source of ground truth. Measurements end up standing in for the constructs purported to be measured, normalizing the assumptions made during the measurement modeling process and embedding them throughout society. In other words, “measures are more than a creation of society, they create society.” [1]. Collapsing the distinctions between constructs and their operationalizations is therefore not just theoretically or pedantically concerning—it is practically concerning with very real, fairness-related consequences.

How we decide to measure what we are researching is influenced by our backgrounds, including our culture, implicit biases, and individual experiences. For me as a middle-class, cisgender white woman, the decisions I make about measurement will probably default to ones that make the most sense to me and others like me, and thus measure characteristics about us most accurately if I don’t think carefully about it. There are major implications for research here because this could affect the validity of my measurements for other populations.

This doesn’t mean that standardized scales or indices, for instance, won’t work for diverse groups of people. What it means is that researchers must not ignore difference in deciding how to measure a variable in their research. Doing so may serve to push already marginalized people further into the margins of academic research and, consequently, social work intervention. Social work researchers, with our strong orientation toward celebrating difference and working for social justice, are obligated to keep this in mind for ourselves and encourage others to think about it in their research, too.

This involves reflecting on what we are measuring, how we are measuring, and why we are measuring. Do we have biases that impacted how we operationalized our concepts? Did we include stakeholders and gatekeepers in the development of our concepts? This can be a way to gain access to vulnerable populations. What feedback did we receive on our measurement process and how was it incorporated into our work? These are all questions we should ask as we are thinking about measurement. Further, engaging in this intentionally reflective process will help us maximize the chances that our measurement will be accurate and as free from bias as possible.

How we decide to measure our variables determines what kind of data we end up with in our research project. Because scientific processes are a part of our sociocultural context, the same biases and oppressions we see in the real world can be manifested or even magnified in research data. Jagadish and colleagues (2021)[1] presents four dimensions of data equity that are relevant to consider: in representation of non-dominant groups within data sets; in how data is collected, analyzed, and combined across datasets; in equitable and participatory access to data, and finally in the outcomes associated with the data collection. Historically, we have mostly focused on the outcomes of measures producing outcomes that are biased in one way or another, and this section reviews many such examples. However, it is important to note that equity must also come from designing measures that respond to questions like:

- Are groups historically suppressed from the data record represented in the sample?

- Are equity data gathered by researchers and used to uncover and quantify inequity?

- Are the data accessible across domains and levels of expertise, and can community members participate in the design, collection, and analysis of the public data record?

- Are the data collected used to monitor and mitigate inequitable impacts?

So, it’s not just about whether measures work for one population for another. Data equity is about the context in which data are created from how we measure people and things. We agree with these authors that data equity should be considered within the context of automated decision-making systems and recognizing a broader literature around the role of administrative systems in creating and reinforcing discrimination. To combat the inequitable processes and outcomes we describe below, researchers must foreground equity as a core component of measurement.

[insert content on how algorithms are black boxes for a reason since they foreclose a lot of these considerations]

Key Takeaways

- Mismatches between conceptualization and measurement are often places in which bias and systemic injustice enter the research process.

- Measurement modeling is a way of foregrounding researcher’s assumptions in how they connect their conceptual definitions and operational definitions.

- Social work research consumers should critically evaluate the construct validity and reliability of measures in the studies of social work populations.

Exercises

- Examine an article that uses quantitative methods to investigate your topic area.

- Identify the conceptual definitions the authors used.

- These are usually in the introduction section.

- Identify the operational definitions the authors used.

- These are usually in the methods section in a subsection titled measures.

- List the assumptions that link the conceptual and operational definitions.

- For example, that attendance can be measured by a classroom sign-in sheet.

- Do the authors identify any limitations for their operational definitions (measures) in the limitations or methods section?

- Do you identify any limitations in how the authors operationalized their variables?

- Apply the specific subtypes of construct validity and reliability.

10.3 Post-positivism: The assumptions of quantitative methods

Learning Objectives

Learners will be able to…

- Ground your research project and working question in the philosophical assumptions of social science

- Define the terms ‘ontology‘ and ‘epistemology‘ and explain how they relate to quantitative and qualitative research methods

- Apply feminist, anti-racist, and decolonization critiques of social science to your project

- Define axiology and describe the axiological assumptions of research projects

Measurement modeling itself relies on a number of underlying assumptions about truth, discovery, and power that shape the research process. These assumptions are easy to overlook, but they are crucial for understanding how quantitative methods have adapted over time to become more robust against invalid, unreliable, and unfair measures.

What are your assumptions?

Social workers must understand measurement theory to engage in social justice work. That’s because measurement theory and its supporting philosophical assumptions will help sharpen your perceptions of the social world. They help social workers build heuristics that can help identify the fundamental assumptions at the heart of social conflict and social problems. They alert you to the patterns in the underlying assumptions that different people make and how those assumptions shape their worldview, what they view as true, and what they hope to accomplish. In the next section, we will review feminist and other critical perspectives on research, and they should help inform you of how assumptions about research can reinforce oppression.

Understanding these deeper structures behind research evidence is a true gift of social work research. Because we acknowledge the usefulness and truth value of multiple philosophies and worldviews contained in this chapter, we can arrive at a deeper and more nuanced understanding of the social world.

Building your ice float

Before we can dive into philosophy, we need to recall out conversation from Chapter 1 about objective truth and subjective truths. Let’s test your knowledge with a quick example. Is crime on the rise in the United States? A recent Five Thirty Eight article highlights the disparity between historical trends on crime that are at or near their lowest in the thirty years with broad perceptions by the public that crime is on the rise (Koerth & Thomson-DeVeaux, 2020).[8] Social workers skilled at research can marshal objective truth through statistics, much like the authors do, to demonstrate that people’s perceptions are not based on a rational interpretation of the world. Of course, that is not where our work ends. Subjective truths might decenter this narrative of ever-increasing crime, deconstruct its racist and oppressive origins, or simply document how that narrative shapes how individuals and communities conceptualize their world.

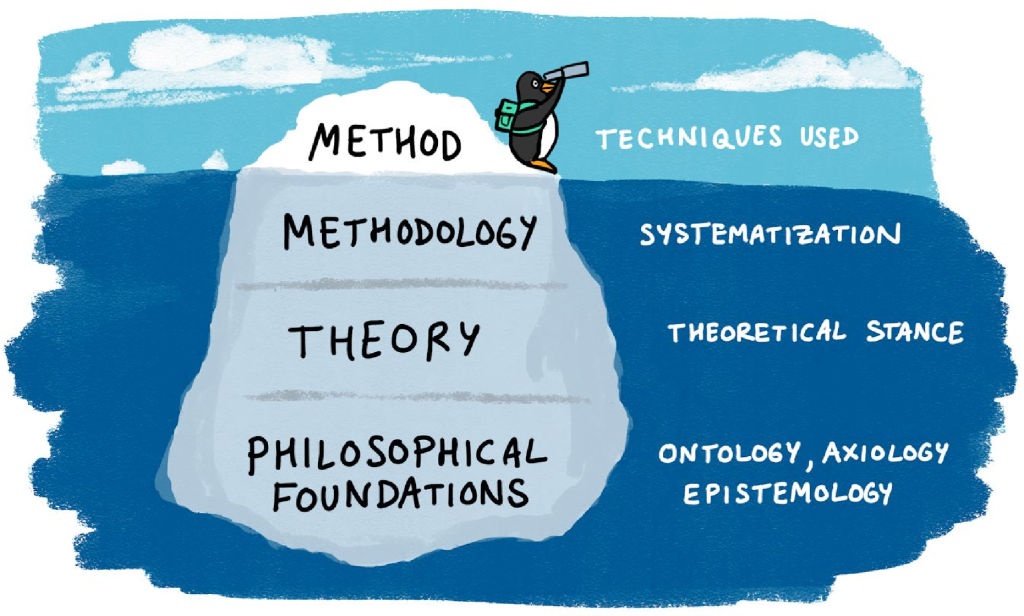

Objective does not mean right, and subjective does not mean wrong. Researchers must understand what kind of truth they are searching for so they can choose a theoretical framework, methodology, and research question that matches. As we discussed in Chapter 1, researchers seeking objective truth (one of the philosophical foundations at the bottom of Figure 7.1) often employ quantitative methods (one of the methods at the top of Figure 7.1). Similarly, researchers seeking subjective truths (again, at the bottom of Figure 7.1) often employ qualitative methods (at the top of Figure 7.1). This chapter is about the connective tissue, and by the time you are done reading, you should have a first draft of a theoretical and philosophical (a.k.a. paradigmatic) framework for your study.

Ontology: Assumptions about what is real & true

In section 1.2, we reviewed the two types of truth that social work researchers seek—objective truth and subjective truths —and linked these with the methods—quantitative and qualitative—that researchers use to study the world. If those ideas aren’t fresh in your mind, you may want to navigate back to that section for an introduction.

These two types of truth rely on different assumptions about what is real in the social world—i.e., they have a different ontology. Ontology refers to the study of being (literally, it means “rational discourse about being”). In philosophy, basic questions about existence are typically posed as ontological, e.g.:

- What is there?

- What types of things are there?

- How can we describe existence?

- What kind of categories can things go into?

- Are the categories of existence hierarchical?

Objective vs. subjective ontologies

At first, it may seem silly to question whether the phenomena we encounter in the social world are real. Of course you exist, your thoughts exist, your computer exists, and your friends exist. You can see them with your eyes. This is the ontological framework of realism, which simply means that the concepts we talk about in science exist independent of observation (Burrell & Morgan, 1979).[9] Obviously, when we close our eyes, the universe does not disappear. You may be familiar with the philosophical conundrum: “If a tree falls in a forest and no one is around to hear it, does it make a sound?”

The natural sciences, like physics and biology, also generally rely on the assumption of realism. Lone trees falling make a sound. We assume that gravity and the rest of physics are there, even when no one is there to observe them. Mitochondria are easy to spot with a powerful microscope, and we can observe and theorize about their function in a cell. The gravitational force is invisible, but clearly apparent from observable facts, such as watching an apple fall from a tree. Of course, out theories about gravity have changed over the years. Improvements were made when observations could not be correctly explained using existing theories and new theories emerged that provided a better explanation of the data.

As we discussed in section 1.2, culture-bound syndromes are an excellent example of where you might come to question realism. Of course, from a Western perspective as researchers in the United States, we think that the Diagnostic and Statistical Manual (DSM) classification of mental health disorders is real and that these culture-bound syndromes are aberrations from the norm. But what about if you were a person from Korea experiencing Hwabyeong? Wouldn’t you consider the Western diagnosis of somatization disorder to be incorrect or incomplete? This conflict raises the question–do either Hwabyeong or DSM diagnoses like post-traumatic stress disorder (PTSD) really exist at all…or are they just social constructs that only exist in our minds?

If your answer is “no, they do not exist,” you are adopting the ontology of anti-realism (or relativism), or the idea that social concepts do not exist outside of human thought. Unlike the realists who seek a single, universal truth, the anti-realists perceive a sea of truths, created and shared within a social and cultural context. Unlike objective truth, which is true for all, subjective truths will vary based on who you are observing and the context in which you are observing them. The beliefs, opinions, and preferences of people are actually truths that social scientists measure and describe. Additionally, subjective truths do not exist independent of human observation because they are the product of the human mind. We negotiate what is true in the social world through language, arriving at a consensus and engaging in debate within our socio-cultural context.

These theoretical assumptions should sound familiar if you’ve studied social constructivism or symbolic interactionism in your other MSW courses, most likely in human behavior in the social environment (HBSE).[10] From an anti-realist perspective, what distinguishes the social sciences from natural sciences is human thought. When we try to conceptualize trauma from an anti-realist perspective, we must pay attention to the feelings, opinions, and stories in people’s minds. In their most radical formulations, anti-realists propose that these feelings and stories are all that truly exist.

What happens when a situation is incorrectly interpreted? Certainly, who is correct about what is a bit subjective. It depends on who you ask. Even if you can determine whether a person is actually incorrect, they think they are right. Thus, what may not be objectively true for everyone is nevertheless true to the individual interpreting the situation. Furthermore, they act on the assumption that they are right. We all do. Much of our behaviors and interactions are a manifestation of our personal subjective truth. In this sense, even incorrect interpretations are truths, even though they are true only to one person or a group of misinformed people. This leads us to question whether the social concepts we think about really exist. For researchers using subjective ontologies, they might only exist in our minds; whereas, researchers who use objective ontologies which assume these concepts exist independent of thought.

How do we resolve this dichotomy? As social workers, we know that often times what appears to be an either/or situation is actually a both/and situation. Let’s take the example of trauma. There is clearly an objective thing called trauma. We can draw out objective facts about trauma and how it interacts with other concepts in the social world such as family relationships and mental health. However, that understanding is always bound within a specific cultural and historical context. Moreover, each person’s individual experience and conceptualization of trauma is also true. Much like a client who tells you their truth through their stories and reflections, when a participant in a research study tells you what their trauma means to them, it is real even though only they experience and know it that way. By using both objective and subjective analytic lenses, we can explore different aspects of trauma—what it means to everyone, always, everywhere, and what is means to one person or group of people, in a specific place and time.

Epistemology: Assumptions about how we know things

Having discussed what is true, we can proceed to the next natural question—how can we come to know what is real and true? This is epistemology. Epistemology is derived from the Ancient Greek epistēmē which refers to systematic or reliable knowledge (as opposed to doxa, or “belief”). Basically, it means “rational discourse about knowledge,” and the focus is the study of knowledge and methods used to generate knowledge. Epistemology has a history as long as philosophy, and lies at the foundation of both scientific and philosophical knowledge.

Epistemological questions include:

- What is knowledge?

- How can we claim to know anything at all?

- What does it mean to know something?

- What makes a belief justified?

- What is the relationship between the knower and what can be known?

While these philosophical questions can seem far removed from real-world interaction, thinking about these kinds of questions in the context of research helps you target your inquiry by informing your methods and helping you revise your working question. Epistemology is closely connected to method as they are both concerned with how to create and validate knowledge. Research methods are essentially epistemologies – by following a certain process we support our claim to know about the things we have been researching. Inappropriate or poorly followed methods can undermine claims to have produced new knowledge or discovered a new truth. This can have implications for future studies that build on the data and/or conceptual framework used.

Research methods can be thought of as essentially stripped down, purpose-specific epistemologies. The knowledge claims that underlie the results of surveys, focus groups, and other common research designs ultimately rest on epistemological assumptions of their methods. Focus groups and other qualitative methods usually rely on subjective epistemological (and ontological) assumptions. Surveys and and other quantitative methods usually rely on objective epistemological assumptions. These epistemological assumptions often entail congruent subjective or objective ontological assumptions about the ultimate questions about reality.

Objective vs. subjective epistemologies

One key consideration here is the status of ‘truth’ within a particular epistemology or research method. If, for instance, some approaches emphasize subjective knowledge and deny the possibility of an objective truth, what does this mean for choosing a research method?

We began to answer this question in Chapter 1 when we described the scientific method and objective and subjective truths. Epistemological subjectivism focuses on what people think and feel about a situation, while epistemological objectivism focuses on objective facts irrelevant to our interpretation of a situation (Lin, 2015).[11]

While there are many important questions about epistemology to ask (e.g., “How can I be sure of what I know?” or “What can I not know?” see Willis, 2007[12] for more), from a pragmatic perspective most relevant epistemological question in the social sciences is whether truth is better accessed using numerical data or words and performances. Generally, scientists approaching research with an objective epistemology (and realist ontology) will use quantitative methods to arrive at scientific truth. Quantitative methods examine numerical data to precisely describe and predict elements of the social world. For example, while people can have different definitions for poverty, an objective measurement such as an annual income of “less than $25,100 for a family of four” provides a precise measurement that can be compared to incomes from all other people in any society from any time period, and refers to real quantities of money that exist in the world. Mathematical relationships are uniquely useful in that they allow comparisons across individuals as well as time and space. In this book, we will review the most common designs used in quantitative research: surveys and experiments. These types of studies usually rely on the epistemological assumption that mathematics can represent the phenomena and relationships we observe in the social world.

Although mathematical relationships are useful, they are limited in what they can tell you. While you can learn use quantitative methods to measure individuals’ experiences and thought processes, you will miss the story behind the numbers. To analyze stories scientifically, we need to examine their expression in interviews, journal entries, performances, and other cultural artifacts using qualitative methods. Because social science studies human interaction and the reality we all create and share in our heads, subjectivists focus on language and other ways we communicate our inner experience. Qualitative methods allow us to scientifically investigate language and other forms of expression—to pursue research questions that explore the words people write and speak. This is consistent with epistemological subjectivism’s focus on individual and shared experiences, interpretations, and stories.

It is important to note that qualitative methods are entirely compatible with seeking objective truth. Approaching qualitative analysis with a more objective perspective, we look simply at what was said and examine its surface-level meaning. If a person says they brought their kids to school that day, then that is what is true. A researcher seeking subjective truth may focus on how the person says the words—their tone of voice, facial expressions, metaphors, and so forth. By focusing on these things, the researcher can understand what it meant to the person to say they dropped their kids off at school. Perhaps in describing dropping their children off at school, the person thought of their parents doing the same thing or tried to understand why their kid didn’t wave back to them as they left the car. In this way, subjective truths are deeper, more personalized, and difficult to generalize.

Self-determination and free will

When scientists observe social phenomena, they often take the perspective of determinism, meaning that what is seen is the result of processes that occurred earlier in time (i.e., cause and effect). This process is represented in the classical formulation of a research question which asks “what is the relationship between X (cause) and Y (effect)?” By framing a research question in such a way, the scientist is disregarding any reciprocal influence that Y has on X. Moreover, the scientist also excludes human agency from the equation. It is simply that a cause will necessitate an effect. For example, a researcher might find that few people living in neighborhoods with higher rates of poverty graduate from high school, and thus conclude that poverty causes adolescents to drop out of school. This conclusion, however, does not address the story behind the numbers. Each person who is counted as graduating or dropping out has a unique story of why they made the choices they did. Perhaps they had a mentor or parent that helped them succeed. Perhaps they faced the choice between employment to support family members or continuing in school.

For this reason, determinism is critiqued as reductionistic in the social sciences because people have agency over their actions. This is unlike the natural sciences like physics. While a table isn’t aware of the friction it has with the floor, parents and children are likely aware of the friction in their relationships and act based on how they interpret that conflict. The opposite of determinism is free will, that humans can choose how they act and their behavior and thoughts are not solely determined by what happened prior in a neat, cause-and-effect relationship. Researchers adopting a perspective of free will view the process of, continuing with our education example, seeking higher education as the result of a number of mutually influencing forces and the spontaneous and implicit processes of human thought. For these researchers, the picture painted by determinism is too simplistic.

A similar dichotomy can be found in the debate between individualism and holism. When you hear something like “the disease model of addiction leads to policies that pathologize and oppress people who use drugs,” the speaker is making a methodologically holistic argument. They are making a claim that abstract social forces (the disease model, policies) can cause things to change. A methodological individualist would critique this argument by saying that the disease model of addiction doesn’t actually cause anything by itself. From this perspective, it is the individuals, rather than any abstract social force, who oppress people who use drugs. The disease model itself doesn’t cause anything to change; the individuals who follow the precepts of the disease model are the agents who actually oppress people in reality. To an individualist, all social phenomena are the result of individual human action and agency. To a holist, social forces can determine outcomes for individuals without individuals playing a causal role, undercutting free will and research projects that seek to maximize human agency.

Exercises

- Examine an article from your literature review

- Is human action, or free will, informing how the authors think about the people in their study?

- Or are humans more passive and what happens to them more determined by the social forces that influence their life?

- Reflect on how this project’s assumptions may differ from your own assumptions about free will and determinism. For example, my beliefs about self-determination and free will always inform my social work practice. However, my working question and research project may rely on social theories that are deterministic and do not address human agency.

Radical change